Adaptation or Assimilation? Sequential Effects in the Perception of Dynamic and Static Facial Expressions of Emotion

Published: June 24, 2018

Latest article update: Aug. 23, 2022

Abstract

In emotional facial expressions, sequential effects can result in perceptual changes of a briefly presented test expression due to a preceding prolonged exposure to an adaptor expression. Most studies have shown contrastive (adaptation) aftereffects using static adaptors: a test is perceived as less similar to the adaptor. However, the existence and sign of sequential effects caused by dynamic information in the adaptors are controversial. In a behavioral experiment we tested the influence of realistic (recorded from an actor's face) and artificial (linearly morphed) dynamic transitions between happy and disgusted facial expressions, and of static images of these expressions at their peak, on the perception of ambiguous images perceived by individual participants as 50 % happy and 50 % disgusted. Adaptors (1210 ms each) were repeated four times before presenting the test stimulus (50 ms). Contrary to static prototypical expressions, which revealed contrastive aftereffects, both realistic and morphed transitions led to assimilative effects: ambiguous expressions were perceived and categorized more often as the emotion depicted at the end of the dynamic adaptor. We found no evidence in favor of or against the influence of the linear or non-linear nature of the dynamic adaptors. The results indicate that adaptation to static and dynamic facial information might have dissociated mechanisms. We discuss possible explanations of the results by comparing them to studies of representational momentum and to studies of sequential aftereffects in lower-level dynamic visual stimuli, which showed the change of an aftereffect sign due to shorter or longer adaptation times.

Keywords

Assimilation, emotion, facial expression, representational momentum, adaptation, dynamic face, motion aftereffect

Introduction

The phenomenon of perceptual adaptation is a particular type of contextual influence that one object — the adaptor — can have on another object of the same kind, if they are presented in sequence. A prolonged (at least several seconds) exposure to an adaptor with a high level of a particular characteristic affects the perception of a subsequent briefly presented ambiguous test stimulus, so that its evaluation in terms of the same characteristic changes due to the influence of the adaptor. The procedure itself is also referred to as “adaptation”. In a constantly changing environment, adaptation helps to recalibrate our perception, detect even small deviations from the average context, or keep a representation of an object constant.

In psychophysical studies, two types of sequential effects of adaptation have been described (Palumbo, Ascenzo, & Tommasi, 2017). The first one is the “assimilative” effect, which causes a stimulus to be perceived as more similar to the preceding adaptor than when it is presented alone or after an adaptor from a different category. The second one is the “contrastive” effect that leads to a stimulus being perceived as less similar to the preceding adaptor. As the latter occurs more often, the term “adaptation” is often used as a synonym for contrastive sequential effects only.

Apart from only a few exceptions (Furl et al., 2010; Hsu & Yang, 2013), it is the contrastive effect of adaptation that was revealed in a number of studies of facial emotional expression recognition. Most of them used static images of intense (prototypical) expressions as adaptors, and static images of ambiguous expressions or neutral faces as test stimuli (Butler, Oruc, Fox, & Barton, 2008; Cook, Matei, & Johnston, 2011; Ellamil, Susskind, & Anderson, 2008; Hsu & Young, 2004; Juricevic & Webster, 2012; Leopold, Rhodes, Muller, & Jeffery, 2005). To explain the mechanisms underlying static face adaptation, two models have been proposed: norm-based coding and prototype-based coding. The first model suggests that different facial attributes such as facial identity, age or gender are coded in an opponent manner in relation to an “average” (or “norm”) face that is located in the center of a “face space” (Jeffery et al., 2011; Rhodes & Leopold, 2011). The dimensions of this space would represent various facial configurations that allow us to distinguish people from each other. The same model is also used to explain the contrastive facial expression aftereffects, which can be observed after adaptation to artificially constructed anti-expressions (Cook et al., 2011; Skinner & Benton, 2010). But recently it has been shown that anti-expressions probably have a different status compared to realistic expressions and do not represent natural categories (Matera, Gwinn, O’Neil, & Webster, 2016), so their use in adaptation studies might lead to lower ecological validity.

According to the other model, each facial expression is represented as a distinct category, or prototype, with only minor influences of other categories when they are used as adaptors (Juricevic & Webster, 2012), or with asymmetric interactions between these categories (Hsu & Young, 2004; Korolkova, 2017; Pell & Richards, 2011; Rutherford, Chattha, & Krysko, 2008). It is suggested therefore that adaptation reduces the sensitivity of the observer to the features of only this category, so the features of any other emotional category in the test stimuli become more salient.

The adaptation effects cannot be explained exclusively by low-level modality-specific aftereffects, but involve higher-level processing: for example, there is at least a partial transfer of aftereffects from one facial identity to another (Ellamil et al., 2008; Fox & Barton, 2007) as well as between different viewpoints of the same face (Benton et al., 2007). In addition, several recent studies have found evidence for crossmodal emotional adaptation between facial and vocal expressions of emotion (Pye & Bestelmeyer, 2015; Skuk & Schweinberger, 2013; Wang et al., 2017; Watson et al., 2014), and between tactile and facial ones (Matsumiya, 2013), suggesting that an amodal evaluation of emotion might shift the decision criteria used to judge the expression (Storrs, 2015).

The results obtained on static faces, as well as the underlying mechanisms, are often generalized to explain our perception of faces in real life. Nonetheless, to date, only a limited number of studies have explored the adaptation effects in dynamic faces, and it is still not fully understood whether they share the same mechanisms as static face aftereffects or not. Since in real life we perceive faces that are constantly moving (such as those of our communication partners), it may be more ecologically relevant to explore the sequential effects of dynamic facial expressions as well. In the present study we aimed at a deeper understanding of the features of dynamic face aftereffects and at broadening our knowledge of face perception in general.

An early unpublished study that compared the perceptual adaptation to static and dynamic facial expressions found no aftereffects with the dynamic adaptors (Dube, 1997). The existence of two separate systems was suggested: the first one analyzes dynamic information and is probably updated more frequently than the second one, which analyzes static information. Thus the former may be less prone to contextual influences than the latter. As an explanation of the obtained results, it was also suggested that there may be a lack of information transfer between the two systems, and although there were no aftereffects with the use of dynamic adaptors and static tests, contextual influences might be possible when both the adaptor and test are static, or when both are dynamic.

In line with this suggestion, several papers introduced dynamic test stimuli. In one study, low-intensity dynamic expressions of happiness and disgust were categorized after adaptation to dynamic or static “anti-expressions” compared to a baseline condition of a dynamic (rigid head motion only) or static neutral face (Curio, Giese, Breidt, Kleiner, & Bülthoff, 2010). All expressions were 3D-modeled avatars based on a motion-captured actor's facial expressions starting from neutral, then reaching peak intensity, and finally decreasing back to the neutral state. However, contrary to the prediction previously made by Dube, the results showed that compared to adaptation to a neutral face, adaptation to both static and dynamic anti-expressions equally increases the recognition rate of the low-intensity dynamic tests (Curio et al., 2010, Experiment 1). When the same anti-expressions were presented with their frame order reversed in time, they produced an aftereffect of the same size as in non-reversed adaptors (Curio et al., 2010, Experiment 2), therefore suggesting the independence of facial expression aftereffects from the dynamic organization of the adaptor.

Another study used the same 3D model to animate the avatars, but the adaptors started with a neutral expression and proceeded to a peak-intensity happy or disgusted expression (de la Rosa, Giese, Bülthoff, & Curio, 2013). The adaptation effect to these dynamic stimuli was significantly lower compared to static images, while test stimuli were frame-by-frame morphs of the two dynamic adaptors (happy and disgusted). As this study also varied the availability of rigid head motion and showed that it can modulate the size of the adaptation effect, it was suggested that both static and dynamic types of information are important for the aftereffects.

The hypothesis of separate mechanisms of adaptation to static and dynamic information in facial expressions, which could accumulate to produce a larger effect in dynamic faces, has been explicitly tested (Korolkova, 2015, Experiment 1). This study did not reveal any additional aftereffects due to the dynamic organization of facial expressions compared to static images. In particular, when participants were adapted for 5 seconds to static images of intense happy or sad expressions, the subsequently presented ambiguous static expressions derived from transitions between happiness and sadness were biased away from the adaptor, which is consistent with other static-face adaptation studies. Adapting to dynamic stimuli, which started from an ambiguous expression and then developed towards prototypical happiness or sadness, led to an adaptation effect of the same size as in static adaptors. However, in the case of dynamic transitions between happy and sad displays presented as adaptors, no aftereffects were observed at all; that is, the perception of subsequently shown static facial expressions did not change depending on the previously displayed transitions. This lack of influence of dynamic organization of the adaptor was consistent with the study by Curio et al. (2010). In a follow-up experiment, the time of adaptation to dynamic transitions was prolonged to 10 seconds (Korolkova, 2015, Experiment 2); in this case a contrastive aftereffect was found, and it was suggested to occur due to static (configural) information of the emotion, and not due to its dynamic properties.

In sum, the debate about the influence of dynamic information on facial emotional expression aftereffects is not resolved. One possible limitation of a previous study (Korolkova, 2015) was that the natural speed of facial dynamics was substantially changed to make the adaptors long enough. According to other studies, at least one second of exposure to a static peak expression is necessary to obtain the contrastive aftereffect (Burton, Jeffery, Bonner, & Rhodes, 2016), and adapting for about 5 seconds consistently leads to an above-chance effect both for static and dynamic adaptors (de la Rosa et al., 2013; Hsu & Young, 2004). To achieve this adaptation time, in the previous study the dynamic transitions were presented 5 times slower than the original recordings of the facial displays, while the speed of the dynamic expressions starting from an ambiguous display was up to 19.5 times slower (Korolkova, 2015). This speed alteration could have made the adaptors highly unnatural, which could affect their perception and influence on the subsequent static images. Therefore in the present study, to reduce the impact of this unnaturalness, we adopted another paradigm (Curio et al., 2010; de la Rosa et al., 2013) that allowed us to achieve the overall prolonged adaptation time by presenting several identical dynamic adaptors in a row without significant speed alterations.

Another factor that can possibly influence the existence of aftereffects is the qualitative characteristic of the facial dynamics in the adaptor. In previous studies, either 3D avatars based on actors’ motion capture were used as adaptors (Curio et al., 2010; de la Rosa et al., 2013), or the adaptors were video recordings of actors’ faces (Dube, 1997; Korolkova, 2015, 2017). However, evidence has emerged that the recognition of emotions from realistic (based on non-linear face movements) and artificial (linearly morphed) dynamic facial displays can differ (Cosker, Krumhuber, & Hilton, 2015; Dobs et al., 2014; Korolkova, 2018; Krumhuber & Scherer, 2016), and therefore it is possible that adaptation aftereffects to these types of stimuli might also be different. In particular, presenting morphed adaptors with smooth linear transitions might update the dynamic system less frequently than natural movement, which can have different speeds in different face regions, and thus morphed adaptors might lead to a higher adaptation effect.

In the current study we explored the adaptation to static facial expressions and the dynamic transitions between them, both realistic and artificial. We expected contrastive aftereffects after adaptation to static prototypical expressions; the static-adaptor conditions served as a baseline, to which the dynamic-adaptor conditions were compared. In the case of dynamic adaptors, there could be no effect of adaptation, which would be similar to Experiment 1 in a previous study with video recordings (Korolkova, 2015), or a contrastive effect, as has been found in studies using artificial avatars (Curio et al., 2010; de la Rosa et al., 2013). The lack of aftereffect in the case of dynamic adaptors but its presence in the static adaptors would indicate dissociated mechanisms for the two conditions, with the prevalence of low-level visual processing in the case of static adaptors. If, on the other hand, the adaptation aftereffect is found in both static and dynamic adaptors, the impact of higher-level processing and emotion judgments might be more important for producing the aftereffects. Finally, we included the factor of natural or artificial dynamics in order to compare the aftereffects after adaptation to video recordings and to linear dynamic morphing. If naturalness/linearity is important for adaptation, we expected a difference in the aftereffects; otherwise, if it does not play any role in adaptation, the aftereffects for both video recordings and morphing would be similar. As traditional frequentist methods of hypothesis testing cannot provide evidence in favor of no differences between conditions (H₀), we used Bayes factors as an additional measure to compare the aftereffects between three types of adaptors, as well as in each of them.

Materials and Method

Participants

Eighty-seven people participated in the study. Among them, 30 participants (20 females, 10 males, ages 17-58, median age 20 years) were included in Group 1 (adaptation to video recordings); 30 participants (21 females, 9 males, ages 17-37, median age 18.5 years) were included in Group 2 (adaptation to morphed transitions); and 27 participants (20 females, 7 males, ages 17-23, median age 19 years) were included in Group 3 (adaptation to static images). All participants had normal or corrected-to-normal vision. The study was conducted in accordance with the Code of Ethics of the World Medical Association (Declaration of Helsinki).

Stimuli

Stimuli were based on video recordings of a female poser who showed transitions between prototypical happy and disgusted expressions on her face. Although happiness and disgust are not considered opponent emotions — according to other studies, the opposite of happiness is sadness, but there is no clear opposite to disgust (Hsu & Young, 2004; Korolkova, 2017; Rutherford et al., 2008) — we selected these two expressions to be able to compare the results of the current study to previous ones that also explored the adaptation to dynamic expressions (Curio et al., 2010; de la Rosa et al., 2013). The overall length of the experiment allowed us to present only the transition between one pair of emotions, although further studies should extend the range of transitions used as dynamic adaptors, as has been done previously with a wider range of static and dynamic basic emotions (Korolkova, 2017; Rutherford et al., 2008). We presented recordings of only one female poser, because of the lack of large and readily available datasets of naturalistic dynamic transitions between basic emotions. This may prevent the results of the current study from being generalized to other emotions and posers.

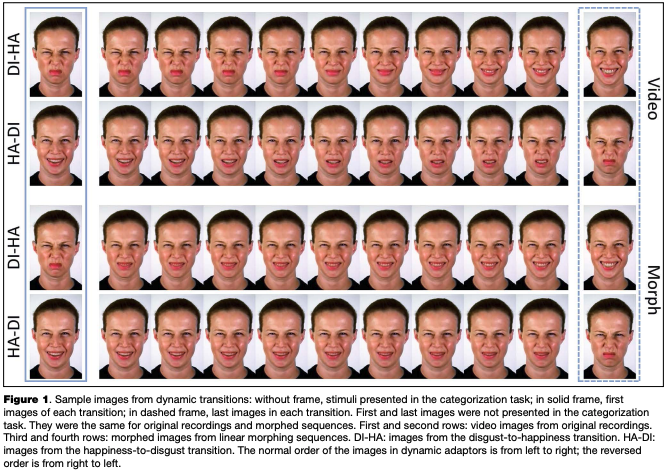

The technical details of the expression recording procedure have been described elsewhere (Korolkova, 2017). Briefly, the poser was asked to practice performing basic expressions and the transitions between them before the recording session. She was provided with sample images of intense expressions during the recording session and was asked to look straight into the camera placed in front of her, and to avoid blinking during posing. Her face was recorded continuously at a speed of 120 frames per second. After the recording session, the transitions between emotions were cropped out of the recording so that each transition started with one of the two intense expressions (either happiness or disgust) and then dynamically changed to the other intense expression (disgust or happiness). All frames of the video clips were rotated to correct for head tilt and cropped to 370 × 600 pixels. Each resulting clip lasted 1 second (121 frames). In addition, we used linear morphing software (Abrosoft FantaMorf 3.0) to prepare artificial dynamic transitions between the first and the last frames of each video clip. The number of frames, frame rate and linear dimensions of each image in the morphing sequences were the same as in the corresponding video clips. Sample frames from both transitions, including the first and last frames and intermediate frames used in the categorization task (see below), are shown in Figure 1.

The dynamic transitions were presented with normal and reversed order of frames. In the case of a contrastive aftereffect, we expected that after adaptation to a dynamic transition with the normal frame order, the perception of an ambiguous static expression would be biased away from the emotion at the end of the adaptor, compared to adaptation to the same but time-reversed transition. That would mean that the expression at the end of the transition acts similarly to a static adaptor, and there are no influences of previously shown dynamic changes on the perception of the test stimulus.

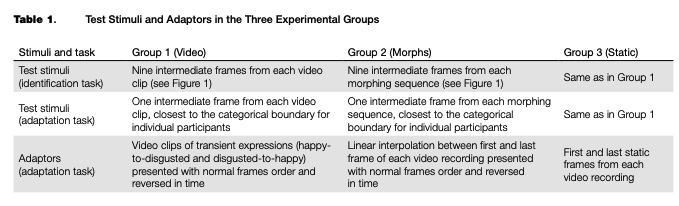

Each participant completed two tasks in sequence: a categorization task and an adaptation task (described in detail in the Design and Procedure section below). The categorization task included presentation of test stimuli, and the adaptation task included presentation of adaptors and test stimuli. Adaptors and tests differed between the three experimental groups (see Table 1 for a summary). In Group 1, test stimuli in the adaptation and categorization tasks were intermediate static frames derived from each video clip, and adaptors were dynamic video clips presented to the participants either with the normal frame order or with the order of frames reversed in time. In Group 2, the test stimuli were static frames derived from morphing sequences, and adaptors were dynamically presented morphing sequences. As in Group 1, the adaptors were shown with normal and reversed order of images: the normal-order morphing adaptors were the sequences starting from the first frames of the video clips and then morphed towards the end, while the reversed-order morphing adaptors were the sequences starting from the last frames of the video clips and morphed towards the start. In Group 3, the test stimuli were intermediate frames from the video clips (exactly the same as in Group 1), and adaptors were the first and the last frames of each video clip presented as static images.

Apparatus

The experimental procedure was programmed using PXLab — a collection of Java classes for programming psychophysical experiments (Irtel, 2007) with modifications by Alexander Zhegallo (Zhegallo, 2016). Static and dynamic faces were presented on a ViewSonic G90f 17” CRT display (vertical refresh rate 100 Hz, 1024 x 768 pixels), and at a distance of 60 cm they subtended a visual angle of 16° x 20°.

Design and Procedure

The study had a mixed factorial design with one between-group factor, Stimuli type (video clips, linear morphs or static images), and two within-subject factors: Transition (corresponding to the change of expression displayed by the poser: happiness-to-disgust or disgust-to-happiness) and Frame order (normal, ending with the last frame of original video recordings, or reversed, ending with the first frame of original recordings). In Group 3, where only static images were presented as adaptors, a “normal-order” adaptor was the last frame of the transition, and a “reversed-order” adaptor was its first frame. For example, the normal-order adaptors of three different types derived from the original happiness-to-disgust transition were the following: happiness-to-disgust video recording in Group 1; linear morphing sequence from happiness to disgust in Group 2; and static image of disgust in Group 3.
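The mapping between Transition, Frame order and the emotion an adaptor ends with (or, in Group 3, the emotion the static adaptor shows) can be sketched as follows. This is only an illustrative helper; the function and label names are hypothetical and are not taken from the study's experiment code.

```python
# Hypothetical labels for the two recorded transitions: (first emotion, last emotion).
TRANSITIONS = {
    "happiness-to-disgust": ("happiness", "disgust"),
    "disgust-to-happiness": ("disgust", "happiness"),
}


def final_emotion(transition: str, frame_order: str) -> str:
    """Emotion at the end of a dynamic adaptor, or shown by the static adaptor.

    "normal" order ends with the last frame of the original recording,
    "reversed" order ends with its first frame.
    """
    first, last = TRANSITIONS[transition]
    if frame_order == "normal":
        return last
    if frame_order == "reversed":
        return first
    raise ValueError(f"unknown frame order: {frame_order!r}")


for transition in TRANSITIONS:
    for order in ("normal", "reversed"):
        print(transition, order, "->", final_emotion(transition, order))
```

Under this coding, the normal-order happiness-to-disgust adaptor and the reversed-order disgust-to-happiness adaptor both end with disgust, which is why the static conditions in Group 3 reduce to two images per transition.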

The experimental procedure was the same in the three Groups and included two tasks, completed sequentially by each participant. The first task was categorization of static intermediate images between happy and disgusted expressions, and the second task was perceptual adaptation to expressions.

In the categorization task, nine intermediate images from each of the two transitions (happiness-to-disgust and disgust-to-happiness) were presented one at a time on a light-gray background until response, separated by a 700-ms black central fixation cross. Images were derived from the middle part of the video clips (Groups 1 and 3) or from the morphing sequences (Group 2). They were separated by 5 frames (1/24 second) of the original video recordings, and by the same 5 images in the morphing sequences. For each transition, the range of the stimuli was based on the results of a pilot experiment to ensure that ambiguous expressions (50 % “happy” responses and 50 % “disgusted” responses), as well as those perceived unambiguously as happiness or disgust, were included. The participants were asked to watch the stimuli and to press the left arrow button on a standard keyboard, as fast as possible, if the presented image looked more like a happy face, and to press the right arrow button if it looked more like a disgusted face. Each image was repeated 20 times (360 trials in total, fully randomized). Presentation time was unlimited (until response). Two additional practice trials with the last frame of each sequence were presented before the main experiment.
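The trial counts and the frame spacing quoted above follow from simple arithmetic, which the short sketch below reproduces. The constant names are illustrative; the numbers themselves come from the text (87 is the sum of the three group sizes in the Participants section).

```python
from fractions import Fraction

N_TRANSITIONS = 2            # happiness-to-disgust, disgust-to-happiness
IMAGES_PER_TRANSITION = 9    # intermediate images per transition
REPETITIONS = 20             # presentations of each image
N_PARTICIPANTS = 30 + 30 + 27
RECORDING_FPS = 120          # frame rate of the original recordings
FRAME_STEP = 5               # frames between neighbouring test images

trials_per_participant = N_TRANSITIONS * IMAGES_PER_TRANSITION * REPETITIONS
total_trials = trials_per_participant * N_PARTICIPANTS
step_seconds = Fraction(FRAME_STEP, RECORDING_FPS)

print(trials_per_participant)  # categorization trials per participant
print(total_trials)            # categorization trials across all participants
print(step_seconds)            # spacing between test images, in seconds
```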

Based on the responses collected in the categorization task, psychometric functions were fitted individually for each participant (see Data Analysis section below). In each of the two transitions, an ambiguous image closest to the point of subjective equivalence (50 % happy / 50 % disgusted) was extracted to serve as a test stimulus in the subsequent adaptation task. Note that the 50 % happy / 50 % disgusted images were estimated based on the psychometric functions and were not necessarily presented to the participants during the categorization task.
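As an illustration of how a test frame could be picked from categorization responses, the sketch below fits a plain two-parameter logistic curve by maximum likelihood (Newton's method) and rounds the estimated point of subjective equivalence to the nearest frame. It is a simplified stand-in, not the quickpsy fit used in the study: the functional form, the frame numbers and the response counts are all assumptions made up for the example.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic(frames, n_happy, n_total, n_iter=50, tol=1e-10):
    """ML fit of P(happy) = sigmoid(a + b * (frame - center)) by Newton's method."""
    center = sum(frames) / len(frames)
    xs = [f - center for f in frames]
    a, b = 0.0, 0.0
    for _ in range(n_iter):
        g_a = g_b = h_aa = h_ab = h_bb = 0.0
        for x, k, n in zip(xs, n_happy, n_total):
            p = sigmoid(a + b * x)
            w = n * p * (1.0 - p)          # binomial variance weight
            g_a += k - n * p               # score for the intercept
            g_b += (k - n * p) * x         # score for the slope
            h_aa += w
            h_ab += w * x
            h_bb += w * x * x
        det = h_aa * h_bb - h_ab * h_ab
        step_a = (h_bb * g_a - h_ab * g_b) / det
        step_b = (h_aa * g_b - h_ab * g_a) / det
        a += step_a
        b += step_b
        if abs(step_a) + abs(step_b) < tol:
            break
    return a, b, center


# Made-up data for one participant and one transition (9 images, 20 repetitions).
frames = [40, 45, 50, 55, 60, 65, 70, 75, 80]
n_happy = [20, 19, 17, 13, 8, 4, 2, 0, 0]
n_total = [20] * len(frames)

a, b, center = fit_logistic(frames, n_happy, n_total)
pse = center - a / b        # frame at which P(happy) = 0.5
test_frame = round(pse)     # nearest frame; need not be one of the 9 shown images
print(f"PSE = {pse:.2f}, test frame = {test_frame}")
```

Because the boundary is read off the fitted curve, the selected frame can fall between the nine images that were actually shown, which matches the note above.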

In the adaptation task each trial included the following: 500-ms fixation cross; one to five adaptors (each was shown for 1210 ms followed by a blank screen for 300 ms); test stimulus outlined by a thin white frame (50 ms); blank screen (300 ms); invitation to make a response as to whether the image in the white frame looked more like a happy or disgusted face. We varied the number of adaptors to maintain the participants’ attention and to make the exact moment of test stimuli presentation less predictable. Main trials included four adaptors, as in an earlier study (de la Rosa et al., 2013), and distractor trials included 1, 2, 3 or 5 adaptors. Using four adaptors in a row allowed for a longer overall adaptation time, while the speed of the transitions was only 20 % lower than in the original recordings. This speed adjustment was necessary because the frame rate of the video recordings (120 frames/second) was higher than the refresh rate of the monitors we used (100 frames/second), and we wanted to ensure that every frame of the original recordings would be shown for the same duration (10 ms). The distractor trials with each number of adaptors were counterbalanced between the emotional transitions and were distributed uniformly over the four blocks of the task. For each transition we used only one test stimulus, corresponding to the individual categorical boundary for this participant; therefore, the test stimuli varied between all participants. For each of the two transitions, the main trials with adaptors presented in normal and reversed frame orders were repeated 28 times each. In total, there were 128 trials (16 distractors and 112 main trials) divided into four blocks with self-paced pauses between the blocks. Within each block, the main trials and distractors were randomized. Four additional practice trials (distractors only) were presented before the main experiment.
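The timing and trial counts of the adaptation task can be checked with a few lines of arithmetic. The sketch below uses only values stated in the text; the variable names are illustrative.

```python
DISPLAY_HZ = 100          # monitor refresh rate
RECORDING_FPS = 120       # frame rate of the original recordings
FRAMES_PER_CLIP = 121     # frames in each transition
N_ADAPTORS_MAIN = 4       # adaptors per main trial
FIXATION_MS = 500
BLANK_MS = 300

frame_ms = 1000 // DISPLAY_HZ                  # one recorded frame per refresh
adaptor_ms = FRAMES_PER_CLIP * frame_ms        # duration of one adaptor
slowdown = RECORDING_FPS / DISPLAY_HZ          # displayed duration relative to real time
adaptation_ms = N_ADAPTORS_MAIN * adaptor_ms   # summed adaptor exposure in a main trial
onset_of_test_ms = FIXATION_MS + N_ADAPTORS_MAIN * (adaptor_ms + BLANK_MS)

main_trials = 2 * 2 * 28                       # transitions x frame orders x repetitions
total_trials = main_trials + 16                # plus distractor trials

print(frame_ms, adaptor_ms, adaptation_ms, onset_of_test_ms)
print(main_trials, total_trials)
```

Showing one recorded frame per screen refresh gives 10 ms per frame and 1210 ms per adaptor, so four adaptors add up to 4840 ms of exposure before the test stimulus.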

Data Analysis

The data were analyzed using the R language of statistical analysis, Version 3.4.2 (R Core Team, 2016).

The data from the categorization task for each participant were first filtered to exclude trials in which response times were lower than 100 ms or higher than 10 seconds. The filtered data were used to fit individual psychometric curves using the quickpsy package, Version 0.1.4 (Linares & López-Moliner, 2016), and to estimate the categorical boundary between happy and disgusted expressions for each participant. The psychometric curves were of the following type:

i|/(x) = y + (1 -y)xf(x),

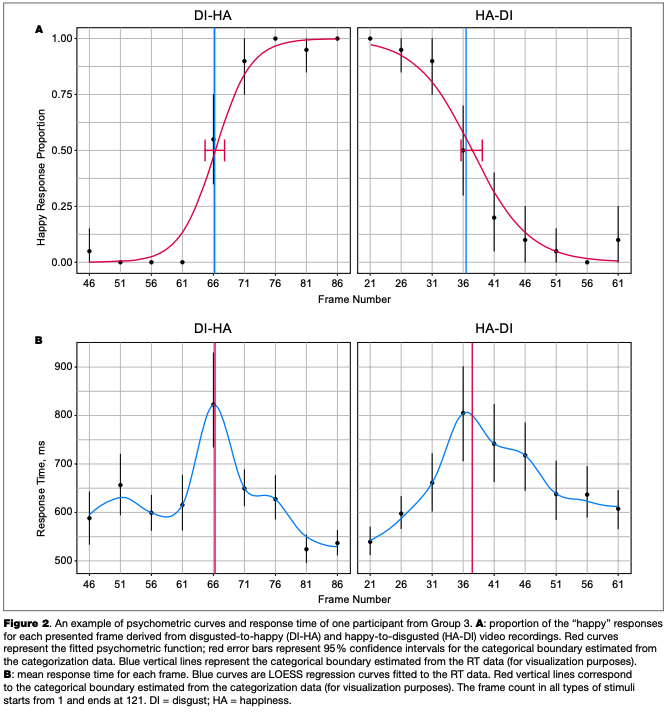

where у is the baseline of random responses (set as .5), x is the position of the intermediate frame in the video clip or morphing sequence (further referred to as frame number), and /is the sigmoidal function with asymptotes 0 and 1. In addition we smoothed the response time (RT) data with LOESS (non-parametric local regression) curves with 50 % smoothing to estimate the frame number with the highest RT. This measure served as an additional estimate of the categorical boundary, at which observers tend to respond slower than when they categorize the images falling within one of the categories. For each transition and each stimuli type (corresponding to video, morph and static adaptors), we calculated FDR-corrected paired /-tests between the boundary estimates from the categorization data and from the RT data.
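The text does not name the FDR procedure that was applied. A common choice, and the one R's `p.adjust` uses for both `method = "BH"` and `method = "fdr"`, is the Benjamini-Hochberg step-up adjustment. The sketch below implements that adjustment under this assumption; the p-values are invented for illustration only.

```python
def bh_adjust(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Made-up p-values, e.g. one paired test per transition and stimuli type.
example_p = [0.01, 0.04, 0.03, 0.20]
print(bh_adjust(example_p))
```

Each raw p-value is scaled by the number of tests divided by its rank, and the running minimum keeps the adjusted values ordered in the same way as the raw ones.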

Adaptation data were analyzed with mixed-effects logistic regression using the lme4 package, Version 1.1-15 (Bates, Mächler, Bolker, & Walker, 2015). The dependent variable was the test stimuli categorization (happiness or disgust). The fixed factors were Transition, Frame order, Stimuli type, and their interactions. The model included a random intercept for each subject, but did not include the overall intercept. We also modeled a random slope for the frame that corresponds to the individual categorical boundary for each subject. In the formula notation used in lme4, the regression model was the following:

Response ~ 0 + Transition × Stimuli Type × Frame Order + (1 | Subject) + (0 + Boundary | Subject),

where Response is the dependent categorical variable (“disgust” or “happiness”); 0 represents exclusion of the overall intercept; Transition × Stimuli Type × Frame Order are fixed factors and their two- and three-way interactions; (1 | Subject) is the random intercept for each subject and (0 + Boundary | Subject) is the random slope for each subject's categorical boundary.
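Written out as an equation, one reading of this model is given below. It is a sketch under assumptions: the text does not state which response level was coded as the "success" outcome, the one-coefficient-per-cell form is an equivalent reparametrization of a no-intercept model with all interactions, and the two random terms are taken to be independent because they are specified as separate terms in the lme4 formula. The subscripts ($i$ for subject, $j$ for trial) are introduced here for illustration.

$$
\operatorname{logit} P(\text{Response}_{ij} = 1) = \beta_{t(ij),\, s(i),\, o(ij)} + u_i + v_i \, \text{Boundary}_{ij},
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2), \quad v_i \sim \mathcal{N}(0, \sigma_v^2),
$$

where $t(ij)$, $s(i)$ and $o(ij)$ index the Transition, Stimuli Type and Frame Order of trial $j$ of subject $i$, $\beta$ holds one fixed coefficient per combination of the three factors (2 × 3 × 2 = 12 cells), $u_i$ is the subject's random intercept, and $v_i$ is the subject's random slope on the boundary frame used as the test stimulus in that trial.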

The factors were sequentially added to the null model (with random intercept only), and the deviance for the pairs of models (with and without each factor) was tested using Pearson's $\chi^2$. The deviance was calculated as $-2(l_0 - l_1)$, where $l_0$ and $l_1$ are the maxima of the log-likelihood for the model with the factor ($l_1$) and without it ($l_0$). For each combination of the fixed factors, confidence intervals were computed using nonparametric bootstrap (1000 permutations). Akaike Information Criterion (AIC) was also computed.
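The comparison of nested models can be illustrated with a small numerical sketch. The log-likelihood values and parameter counts below are invented, and the p-value helper covers only the one-degree-of-freedom case (a factor with more than two levels, or an interaction, adds more parameters and needs the chi-square distribution with the matching degrees of freedom).

```python
import math


def deviance_difference(loglik_without: float, loglik_with: float) -> float:
    """Likelihood-ratio statistic -2 * (l0 - l1) for two nested models."""
    return -2.0 * (loglik_without - loglik_with)


def chi2_sf_df1(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(x / 2.0))


def aic(loglik: float, n_params: int) -> float:
    """Akaike Information Criterion: 2k - 2 * log-likelihood."""
    return 2 * n_params - 2 * loglik


# Hypothetical maxima of the log-likelihood for illustration only.
l0, l1 = -100.0, -98.0           # without / with the added factor
stat = deviance_difference(l0, l1)
print(stat, chi2_sf_df1(stat))   # test statistic and its p-value (1 df)
print(aic(l0, 2), aic(l1, 3))    # lower AIC indicates the preferred model
```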

We used the values predicted by the model to calculate simultaneous linear contrasts between normal and reversed conditions, for each transition and stimuli type. We also compared differences between normal and reversed conditions across three stimuli types. Contrasts were calculated using the multcomp package, Version 1.4-8 (Hothorn, Bretz, & Westfall, 2008), and FDR correction was applied.

In addition, Bayes factors (Bayesian two-sample t-tests) were computed using the BayesFactor package, Version 0.9.12.2 (https://cran.r-project.org/package=BayesFactor) to estimate the adaptation effect (based on predicted values) in each adaptor type, and to compare it between three types of adaptors. We used a Cauchy prior distribution with width = 0.707. Bayes factors, unlike p-values, can provide inference in favor of the null hypothesis (an absence of differences between conditions).

Results

Expression Categorization

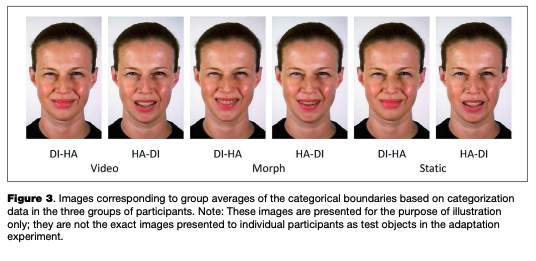

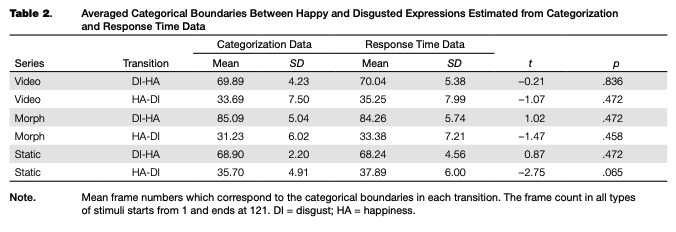

Filtering the data based on response time removed 243 trials (0.78 %) out of the total number of categorization trials (31,320) across all groups of participants, after which the individual psychometric curves were fitted. An example of the psychometric curves and RT of one representative participant from Group 3 is shown in Figure 2. Individual threshold levels were estimated from categorization and RT data and then averaged across participants in each group (see Table 2 and Figure 3). None of the FDR-corrected t-tests between the individual categorical boundaries based on the categorization and RT data was significant (p > .05), which suggests that the two estimates agree and that each may be considered a relevant boundary measure.

Adaptation to Dynamic and Static Expressions

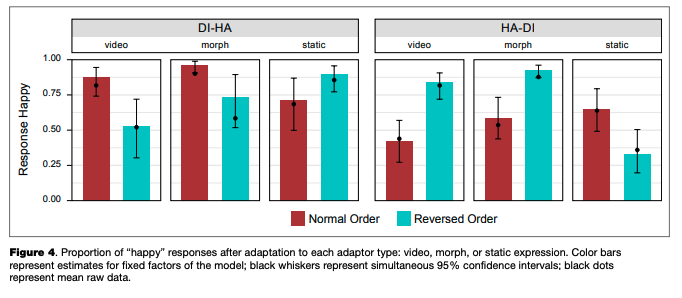

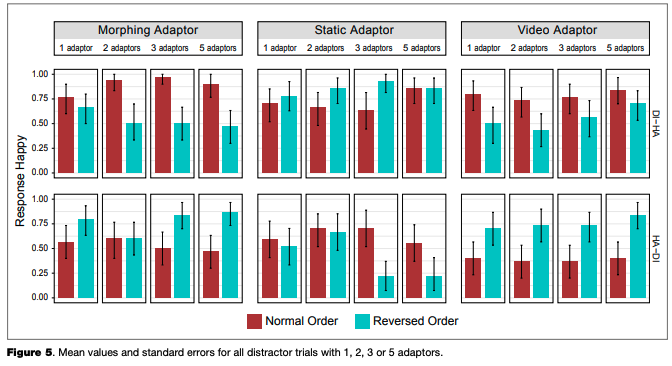

Mean values and standard errors were calculated across participants for all main trials (Figure 4), as well as for distractor trials with different numbers of adaptors (Figure 5). We did not compare the results for main and distractor trials with statistical tests, because the number of main trials was much larger, and there were only a few distractor trials with each number of adaptors.

We also did not compare the results of the adaptation experiment with the categorization task: the differences in their procedures (for example, in presentation time of the test stimuli or in the overall structure of the trial) could have influenced the results significantly. For instance, the presence of the context (adaptor) itself in the adaptation task, or its absence in the categorization task, might provide additional information about the range of possible expressions. Therefore we only make comparisons between adaptors with two different frame orders within each transition and adaptor type.

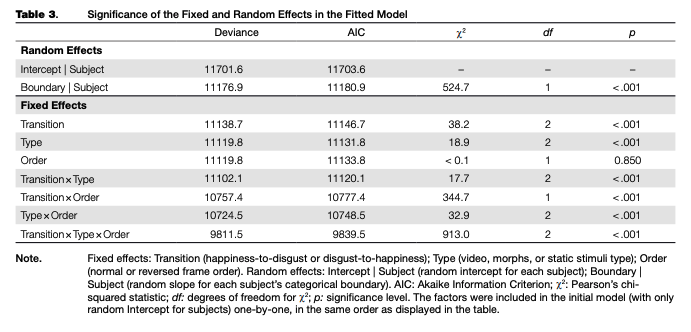

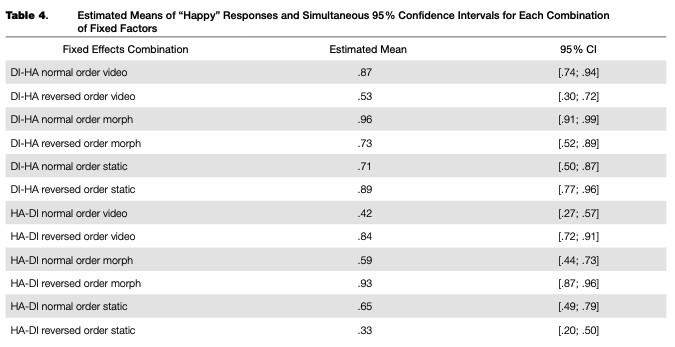

A regression model fitted to the adaptation data (main trials only) explained 70 % of the overall data dispersion (for fixed effects, R²m = .120; for the full model, R²c = .703). The random intercept and random slope for the categorical boundary were significant, as were all fixed factors and their interactions, with the only exception being the main effect of frame order, which was not significant (see Table 3). Nevertheless, we included this factor in the final model. Based on the model fit, estimated means and simultaneous 95 % confidence intervals for each combination of levels of fixed factors were calculated (see Table 4 and Figure 4).

There was an overall bias towards “happy” responses: based on the calculated confidence intervals, the proportion of “happy” responses after adaptation to normal-order video adaptors, the reversed-order static adaptor (static image of “disgust”), and both normal and reversed-order morphed adaptors in the transition from disgust to happiness, as well as to the reversed-order morphed adaptor in the happiness-to-disgust transition, was significantly higher than chance (50 %). For the other adaptors, the proportion of “happy” and “disgusted” responses was lower or was not significantly different from chance.

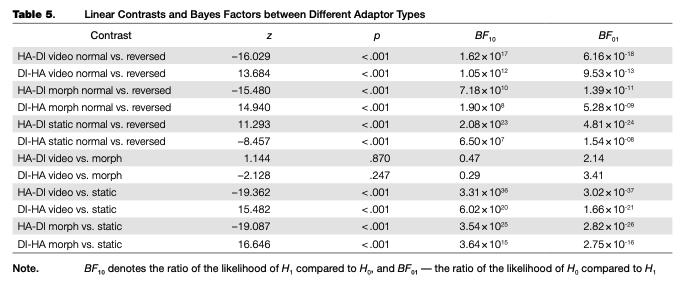

Simultaneous linear contrasts revealed that after adaptation to static happy expressions (the “normal frame order” condition in the disgusted-to-happy transition and the “reversed frame order” condition in the happy-to-disgusted transition), the likelihood of recognizing an ambiguous expression as happiness was significantly lower than after adaptation to a static expression of disgust (the “reversed frame order” condition in the disgusted-to-happy transition and the “normal frame order” condition in the happy-to-disgusted transition). That is, the classical contrastive effect of adaptation was revealed (Table 5).

On the other hand, the estimated probability of categorizing an ambiguous expression as “happy” after adapting to the disgusted-to-happy video recording with the normal frame order was significantly higher than after adapting to the same recording reversed in time. In the happy-to-disgusted video recording, the probability of “happy” responses was significantly lower after adaptation to the normally presented transition, compared to the time-reversed presentation. That is, after adaptation to video recordings the subsequently shown static ambiguous expressions were perceived as more similar to the last-shown frame of the dynamic adaptor, compared to the same adaptor with another frame order (an “assimilative” effect with regard to the emotion on the last frame, or a “contrastive” effect with regard to the emotion on the first frame). The same results were obtained for the morphed adaptors, and the differences between video recordings and morphs were non-significant. The effects of video recordings and morphed sequences were both significantly different from that of the static images.

Bayes factors were calculated for aftereffects in each type of adaptor and pairwise between the aftereffects in the three types of adaptors (Table 5). Comparisons of predicted values for normal and reversed adaptors in static, video and morph conditions with Bayesian paired t-tests suggest strong evidence in favor of H1 (BF10 > 10000). The differences of predicted values between normal- and reversed-adaptor conditions (the strength of the aftereffect) were calculated for each adaptor and each participant and then compared pairwise with Bayesian two-sample t-tests. For video versus static adaptors in both transitions, the Bayes factors suggest differences (BF10 > 10000). The same result was obtained for morph versus static adaptors. The comparisons of video versus morph adaptors do not provide clear evidence in favor of either hypothesis.

Discussion

In a behavioral experiment with three groups of participants, we used a two-alternative forced-choice task to test the influence of static images of happy and disgusted facial expressions and of realistic (recorded from an actor's face) and artificial (linearly morphed) dynamic transitions between happiness and disgust on the perception of ambiguous images, perceived as 50 % happy / 50 % disgusted. For each participant, the categorical boundary was estimated individually based on categorization and response time data for static intermediate expressions, collected prior to the main experiment. The two measures were consistent in defining the individual boundaries between categories, at which participants tended to produce slower responses and make categorical decisions about the expressions at chance level.

In the main experiment, contrary to the adaptation to static prototypical expressions, which showed a contrastive aftereffect, both realistic and morphed transitions led to assimilative effects: the ambiguous expressions were perceived and categorized more often as the emotion depicted at the end of the dynamic adaptor. We found no evidence in favor of or against the influence of the linear or non-linear nature of the dynamic adaptors. Therefore neither of the study hypotheses about the dynamic adaptors (contrastive or null effect of dynamic adaptors; different influence of realistic and morphed adaptors in the case of a contrastive effect) has been supported. The contrastive effect for static expressions, however, is fully consistent with a number of earlier studies (Butler et al., 2008; Cook et al., 2011; Ellamil et al., 2008; Hsu & Young, 2004; Juricevic & Webster, 2012; Leopold et al., 2005). The difference between static and dynamic adaptation aftereffects has also been shown previously (de la Rosa et al., 2013), but the sign of the aftereffect was opposite.

We did not replicate the results of a previous study that used dynamic transitions as adaptors (Korolkova, 2015). Compared to that study, we changed the procedure so that the speed of the transitions was only 20 % slower than in the real video recordings of the actress’ face. This was done in order to make the adaptors look more natural and to make the motion more pronounced. However, the direction of the sequential effect in the present study was opposite to that found previously using the same paradigm (Curio et al., 2010; de la Rosa et al., 2013).

One possible explanation of the lack of a contrastive aftereffect to transitional dynamic expressions and of the greater perceptual similarity of the test stimuli to the ending emotion of dynamic sequences might be related to the so-called representational momentum (RM). This phenomenon occurs when, after observing a moving object (e.g., a car or a runner), a person misjudges its final position as being further along the motion trajectory. RM has also been shown for non-rigid motion. For example, in face perception, a dynamically presented linear morph from a neutral face to an emotional expression induces a positive bias, so that the emotion on the last frame is judged as more intense than actually presented (Yoshikawa & Sato, 2008). In addition to linear morphing, this effect has also been observed on naturally smiling and frowning faces (Thornton, 1997). However, for dynamic expressions of intense pain (linearly morphed stimuli), a negative RM has been observed, so that the final frame was judged as less intense than it was (Prigent, Amorim, & de Oliveira, 2018).

In the current study, the perception of fast dynamic transitions might lead to anticipation of the further strengthening of the ending emotion and to greater sensitivity to its cues in the ambiguous test stimuli. As has been shown in previous studies, RM may increase with higher motion speed, but decrease with the more pronounced expression (Yoshikawa & Sato, 2008). The RM effect reaches its maximum at around 300 ms ISI between the prime and the test stimulus (Freyd & Johnson, 1987), which equals the ISI used in our current study. The RM could also induce a temporal shift, so that the test stimuli in our experiment might be partially masked or displaced by the continuing perception of the dynamic expression. If this is the case, the main cause of masking should be face movements only, as in the static adaptor condition no displacement or forward masking was revealed.

In line with this explanation, our results might indicate different mechanisms for sequential effects in static and dynamic facial expressions, as was suggested earlier (Dube, 1997). More recent brain imaging studies and models of face processing in the brain also support the existence of two separate mechanisms, one of which is mostly related to facial form processing, while the other analyzes its motion and changeable aspects (Bernstein, Erez, Blank, & Yovel, 2018; O’Toole, Roark, & Abdi, 2002; Pitcher, Duchaine, & Walsh, 2014). Consistent with this framework, static face aftereffects (including gender, expression, identity, age, race and face distortion contrastive aftereffects) might occur as an enhanced recognition of deviations from an adapted facial shape which is mostly processed via the ventral pathway, whereas exposure to faces quickly changing their shape involves processing via the dorsal pathway. A further test of the existence of two separate processing routes for static and dynamic aftereffects might use, for example, dynamic adaptors morphed from a male to a female face, or vice versa, with testing on ambiguous male / female faces. If the underlying mechanisms do not depend on the type of changing information, one might expect similar results for changes in gender, expression and other facial characteristics. If, on the other hand, the dynamic face processing system is mostly attuned to ecologically plausible facial movements, there might be a preference for processing only realistic face changes. Further studies are necessary to test these predictions.

Another possible explanation of the results is that the dynamic information in the adaptors used in our study did not have any substantial influence on the aftereffects, but the crucial factor of adaptation was the time of exposure to a full-blown expression at the end of the last adapted transition. Based on group identification data, we calculated the intense expression presentation time from the start or end of the transition to static frames, which would be categorized as “happy” or “disgust” in more than 95 % of trials (as estimated by fitting the sigmoid curves to group data). These times for each 1210 ms-long adaptor are the following. In the disgust-to-happy transition, normal-order video / reversed-order video / normal-order morph / reversed-order morph: 400/570/200/670 ms; in the happy-to-disgust transition, normal-order video / reversed-order video / normal-order morph / reversed-order morph: 700/150/710/120 ms; in static adaptors, 1210 ms in all conditions. For conditions with lower (120-400 ms) exposure times to the full-blown expression just before the test stimulus, the adaptation led to assimilative effects. For conditions with intermediate (570-710 ms) exposure times to the full-blown expression, the proportion of responses to both emotional categories was close to 50/50. For conditions with the longest exposure time (1210 ms in static adaptors), the effect was contrastive. Note that for frames derived from the video recordings, lower exposure times corresponded to the perception of a happy expression, while intermediate exposure times corresponded to the perception of disgust. We therefore cannot separate the effect of exposure time from the effect of emotion (happy or disgusted) based on our data. This might be due to the expression production times for this particular poser only, but it is also possible that a smile (happy expression) is easier to produce in general and therefore takes less time. In dynamic morphs, the relative duration of the perceived happy expression is still lower than that of the disgust expression. Compared to these exposure times, the ones used previously with dynamic adaptors (Korolkova, 2015) were longer, and might therefore contribute to the contrastive effect as well. The exact time of exposure to a peak expression in two other dynamic adaptation studies is difficult to estimate, based on the reported data (Curio et al., 2010; de la Rosa et al., 2013).
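The exposure times listed above can be tabulated and sorted into the three ranges named in the text. The following Python sketch only restates the reported numbers; the dictionary layout, the function name `exposure_band`, and the band labels are assumptions introduced for illustration and are not part of the original analysis.

```python
# Peak-expression exposure times (ms) as reported in the text,
# keyed by (transition, adaptor type, frame order).
PEAK_EXPOSURE_MS = {
    ("disgust-to-happy", "video", "normal"): 400,
    ("disgust-to-happy", "video", "reversed"): 570,
    ("disgust-to-happy", "morph", "normal"): 200,
    ("disgust-to-happy", "morph", "reversed"): 670,
    ("happy-to-disgust", "video", "normal"): 700,
    ("happy-to-disgust", "video", "reversed"): 150,
    ("happy-to-disgust", "morph", "normal"): 710,
    ("happy-to-disgust", "morph", "reversed"): 120,
}
STATIC_EXPOSURE_MS = 1210  # static adaptors, all conditions


def exposure_band(ms):
    """Map an exposure time to the effect described for its range in the text."""
    if 120 <= ms <= 400:
        return "assimilative"
    if 570 <= ms <= 710:
        return "near 50/50"
    if ms == 1210:
        return "contrastive"
    return "not covered by the reported ranges"


bands = {key: exposure_band(ms) for key, ms in PEAK_EXPOSURE_MS.items()}
```

Laid out this way, the confound noted in the text is easy to see: for the video adaptors, the two short exposure times (400 and 150 ms) both belong to sequences ending in a happy expression.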

A systematic testing of the influence of presentation times of the dynamic adaptors might be a topic of further studies. Although for static faces, identity and expression aftereffects have been previously systematically explored for different combinations of adaptor and test presentation times, the shortest adaptation time tested in these studies was 1000 ms (Burton et al., 2016; Leopold et al., 2005; Rhodes, Jeffery, Clifford, & Leopold, 2007), which is similar to the time of each dynamic/static adaptor in our study (1210 ms). Even with one second of adaptation and 200 ms of test stimulus presentation, the contrastive effect in the above-mentioned studies had already emerged, compared to a one-second adaptation and 3200 ms stimulus presentation (Burton et al., 2016). However, when dynamic neutral-to-fearful adaptors lasted for 360 ms (and the expression on the last frame was not necessarily intense), the effect on the test shown for 250 ms was assimilative (Furl et al., 2010). When static expressions, either prototypical or morphed between fear and disgust, were shown for 400 ms in a pseudo-randomized sequence, a preceding expression, which was relatively far along the morphing continua, elicited contrastive aftereffects, while a preceding expression, which was relatively close in the morphing continua, led to assimilative effects (Hsu & Yang, 2013). Notably, in this study the faces shown before the immediately preceding trial (2-back, 3-back etc.) did not change the expression categorization. The same might be also relevant to our current study, where it might be only the last adaptor that produced the aftereffect. The proportion of “happy” responses was stable across different numbers of adaptors (this was not tested statistically due to the limited number of trials with 1, 2, 3 or 5 adaptors; however, the patterns of responses as shown in Figure 4 are similar for any number of adaptors presented in a row), which might indicate a lack of influence of the adaptors shown prior to the one immediately preceding the test stimuli. We should note that this suggestion is only a preliminary one and should be tested in further studies, which would vary the number of adaptors.

In perceptual domains other than faces, motion aftereffects of different signs caused by short dynamic adaptor exposure have been investigated (Kanai & Verstraten, 2005), and three types of these aftereffects have been delineated: visual motion priming, or facilitation, occurring after an 80-ms presentation of the adaptor (spatial grating with sine-wave change of luminance); fast motion aftereffect, which biases perception in a contrastive manner after about 320 ms of adaptation; and “perceptual sensitization”, which requires at least several seconds of adaptation to an ambiguous moving stimulus (changing motion direction by 180° every 80 ms) to produce assimilative effects. In this study, the aftereffect changed its sign at about 160 ms of adaptor presentation followed by a 200 ms inter-stimulus interval. A further study of dynamic face aftereffects following the same design and varying adaptation times from very short (dozens of milliseconds) to rather long (several seconds) might be necessary to gain better insight into the sequential influences they have on subsequent faces.

The results of our adaptation study revealed a substantial bias towards “happy” responses in most experimental conditions. One possible reason for this effect is that a happy expression is generally perceived and recognized faster and more easily compared to other basic emotions, the so-called “happy face advantage” (Calvo & Lundqvist, 2008; Leppanen & Hietanen, 2004; Palermo & Coltheart, 2004). This has been previously observed in a variety of conditions, including briefly (50 ms) presented intense basic expressions, sandwich-masked by a neutral or scrambled face (Barabanschikov, Korolkova, & Lobodinskaya, 2015a); blurred expressions masked in the same way (Barabanschikov, Korolkova, & Lobodinskaya, 2015b); low-intensity expressions (Hess, Blairy, & Kleck, 1997); or when the faces were presented in the periphery of the visual field (Calvo, Nummenmaa, & Avero, 2010). The happy face advantage is probably based on the higher saliency of a smile, a configural change in a face as a whole that is more distinct from a neutral face or from other expressions than they are from one another (Leppanen & Hietanen, 2004). In the current study, the ambiguous (happy/disgusted) test stimuli were presented very briefly (50 ms) as well as being preceded by an adaptor, and the cues of a happy expression could have been recognized more easily compared to cues for disgust. As this effect would be the same in all types of adaptors we used, the differences in the influence of static and dynamic adaptors cannot be attributed to the advantage of a happy expression. However, future studies may benefit from using facial expressions that have comparable recognition accuracy under various conditions of presentation, such as happiness and surprise, or disgust and anger (Barabanschikov et al., 2015a).

Conclusion

The aim of our study was to explore the sequential aftereffects that might be produced by dynamic transitions between prototypical facial expressions of emotion. To achieve this, we conducted an experiment with both static and dynamic adaptors, the influence of which was tested using static test expressions. Contrary to our hypothesis and to the well-known contrastive effect of adaptation to static faces, we found an assimilative bias in the perception of ambiguous faces subsequent to the adaptors. We did not find any evidence related to the influence of qualitative characteristics of the facial movements: linearly changing adaptors or those derived from video clips of an actor. These results might indicate dissociated neural mechanisms for the adaptation to static and dynamic facial information, and the particular importance of adaptor exposure times for the sign of aftereffects.

References

Barabanschikov, V.A., Korolkova, O.A., & Lobodinskaya, E.A. (2015a). [Perception of facial expressions during masking and apparent motion]. Experimental Psychology (Russia), 8 (1), 7-27. (In Russian). Retrieved from http://psyjournals.ru/en/exp/2015/nl/75788.html

Barabanschikov, V.A., Korolkova, O.A., & Lobodinskaya, E.A. (2015b). [Recognition of blurred images of facial emotional expression in apparent movement]. Experimental Psychology (Russia), 8 (4), 5-29. (In Russian). doi:10.17759/exppsy.2015080402

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1-48. doi:10.18637/jss.v067.i01

Benton, C.P., Etchells, P.J., Porter, G., Clark, A.P., Penton-Voak, I.S., & Nikolov, S.G. (2007). Turning the other cheek: The viewpoint dependence of facial expression after-effects. Proceedings of the Royal Society B: Biological Sciences, 274(1622), 2131-2137. doi:10.1098/rspb.2007.0473

Bernstein, M., Erez, Y., Blank, I., & Yovel, G. (2018). An integrated neural framework for dynamic and static face processing. Scientific Reports, 8(1), 7036:1-10. doi:10.1038/s41598-018-25405-9

Burton, N., Jeffery, L., Bonner, J., & Rhodes, G. (2016). The timecourse of expression aftereffects. Journal of Vision, 16 (15), 1-12. doi:10.1167/16.15.1

Butler, A., Oruc, I., Fox, C.J., & Barton, J.J.S. (2008). Factors contributing to the adaptation aftereffects of facial expression. Brain Research, 1191, 116-126. doi:10.1016/j.brainres.2007.10.101

Calvo, M.G., & Lundqvist, D. (2008). Facial expressions of emotion (KDEF): Identification under different display-duration conditions. Behavior Research Methods, 40(1), 109-115. doi:10.3758/BRM.40.1.109

Calvo, M.G., Nummenmaa, L., & Avero, P. (2010). Recognition advantage of happy faces in extrafoveal vision: Featural and affective processing. Visual Cognition, 18(9), 1274-1297. doi:10.1080/13506285.2010.481867

Cook, R., Matei, M., & Johnston, A. (2011). Exploring expression space: Adaptation to orthogonal and anti-expressions. Journal of Vision, 11(4), 1-9. doi:10.1167/11.4.2

Cosker, D., Krumhuber, E., & Hilton, A. (2015). Perceived emotionality of linear and non-linear AUs synthesised using a 3D dynamic morphable facial model. In Proceedings of the Facial Analysis and Animation - FAA'15 (p. 7:1). New York: ACM Press. doi:10.1145/2813852.2813859

Curio, C., Giese, M.A., Breidt, M., Kleiner, M., & Bülthoff, H.H. (2010). Recognition of dynamic facial action probed by visual adaptation. In C. Curio, H.H. Bülthoff, & M.A. Giese (Eds.), Dynamic faces: Insights from experiments and computation (pp. 47-65). Cambridge, MA: MIT Press. doi:10.7551/mitpress/9780262014533.003.0005

Dobs, K., Bülthoff, I., Breidt, M., Vuong, Q.C., Curio, C., & Schultz, J. (2014). Quantifying human sensitivity to spatio-temporal information in dynamic faces. Vision Research, 100, 78-87. doi:10.1016/j.visres.2014.04.009

Dube, S.P. (1997). Visual bases for the perception of facial expressions: A look at some dynamic aspects. Unpublished doctoral dissertation, Concordia University, Montreal, Quebec, Canada.

Ellamil, M., Susskind, J.M., & Anderson, A.K. (2008). Examinations of identity invariance in facial expression adaptation. Cognitive, Affective, & Behavioral Neuroscience, 8(3), 273-281. doi:10.3758/cabn.8.3.273

Fox, C.J., & Barton, J.J.S. (2007). What is adapted in face adaptation? The neural representations of expression in the human visual system. Brain Research, 1127(1), 80-89. doi:10.1016/j.brainres.2006.09.104

Freyd, J.J., & Johnson, J.Q. (1987). Probing the time course of representational momentum. Journal of Experimental Psychology: Learning, Memory, and Cognition, 13(2), 259-268. doi:10.1037/0278-7393.13.2.259

Furl, N., van Rijsbergen, N.J., Kiebel, S.J., Friston, K.J., Treves, A., & Dolan, R.J. (2010). Modulation of perception and brain activity by predictable trajectories of facial expressions. Cerebral Cortex, 20(3), 694-703. doi:10.1093/cercor/bhp140

Hess, U., Blairy, S., & Kleck, R.E. (1997). The intensity of emotional facial expressions and decoding accuracy. Journal of Nonverbal Behavior, 21(4), 241-257. doi:10.1023/A:1024952730333

Hothorn, T., Bretz, F., & Westfall, P. (2008). Simultaneous inference in general parametric models. Biometrical Journal, 50(3), 346-363. doi:10.1002/bimj.200810425

Hsu, S.-M., & Yang, L.-X. (2013). Sequential effects in facial expression categorization. Emotion, 13(3), 573-586. doi:10.1037/a0027285

Hsu, S.-M., & Young, A. (2004). Adaptation effects in facial expression recognition. Visual Cognition, 11(7), 871-899. doi:10.1080/13506280444000030

Irtel, H. (2007). PXLab: The psychological experiments laboratory [online]. Version 2.1.11. Mannheim (Germany): University of Mannheim. Retrieved from http://www.pxlab.de.

Jeffery, L., Rhodes, G., McKone, E., Pellicano, E., Crookes, K., & Taylor, E. (2011). Distinguishing norm-based from exemplar-based coding of identity in children: Evidence from face identity aftereffects. Journal of Experimental Psychology: Human Perception and Performance, 37(6), 1824-1840. doi:10.1037/a0025643

Juricevic, I., & Webster, M.A. (2012). Selectivity of face aftereffects for expressions and anti-expressions. Frontiers in Psychology, 3, 1-10. doi:10.3389/fpsyg.2012.00004

Kanai, R., & Verstraten, F.A.J. (2005). Perceptual manifestations of fast neural plasticity: Motion priming, rapid motion aftereffect and perceptual sensitization. Vision Research, 45 (25-26), 3109-3116. doi:10.1016/j.visres.2005.05.014

Korolkova, O.A. (2015). The role of dynamics in visual adaptation to emotional facial expressions. The Russian Journal of Cognitive Science, 2(4), 38-57. Retrieved from http://www.cogjournal.ru/eng/2/4/KorolkovaRTCS2015.html.

Korolkova, O.A. (2017). [The effect of perceptual adaptation to dynamic facial expressions]. Experimental Psychology (Russia), 10(1), 67-88. (In Russian). doi:10.17759/exppsy.2017100106

Korolkova, O.A. (2018). The role of temporal inversion in the perception of realistic and morphed dynamic transitions between facial expressions. Vision Research, 143, 42-51. doi:10.1016/J.VISRES.2017.10.007

Krumhuber, E.G., & Scherer, K.R. (2016). The look of fear from the eyes varies with the dynamic sequence of facial actions. Swiss Journal of Psychology, 75(1), 5-14. doi:10.1024/1421-0185/a000166

Leopold, D.A., Rhodes, G., Muller, K.-M., & Jeffery, L. (2005). The dynamics of visual adaptation to faces. Proceedings of the Royal Society B: Biological Sciences, 272(1566), 897-904. doi:10.1098/rspb.2004.3022

Leppanen, J.M., & Hietanen, J.K. (2004). Positive facial expressions are recognized faster than negative facial expressions, but why? Psychological Research, 69(1-2), 22-29. doi:10.1007/s00426-003-0157-2

Linares, D., & López-Moliner, J. (2016). quickpsy: An R package to fit psychometric functions for multiple groups. The R Journal, 8(1), 122-131. Retrieved from https://journal.r-project.org/archive/2016-1/linares-na.pdf.

Matera, C., Gwinn, O.S., O’Neil, S.F., & Webster, M.A. (2016). Asymmetric neural responses for expressions, antiexpressions, and neutral faces. In Society For Neuroscience Annual Meeting. Abstract No. 530.14/XX5. Retrieved from http://www.abstractsonline.com/pp8/index.html#!/4071/presentation/30140

Matsumiya, K. (2013). Seeing a haptically explored face: Visual facial-expression aftereffect from haptic adaptation to a face. Psychological Science, 24(10), 2088-2098. doi:10.1177/0956797613486981

O'Toole, A.J., Roark, D.A., & Abdi, H. (2002). Recognizing moving faces: A psychological and neural synthesis. Trends in Cognitive Sciences, 6(6), 261-266. doi:10.1016/S1364-6613(02)01908-3

Palermo, R., & Coltheart, M. (2004). Photographs of facial expression: Accuracy, response times, and ratings of intensity. Behavior Research Methods, Instruments, & Computers, 36(4), 634-638. doi:10.3758/BF03206544

Palumbo, R., Ascenzo, S.D., & Tommasi, L. (Eds.). (2017). High-level adaptation and aftereffects. Frontiers Media SA. doi:10.3389/978-2-88945-147-0

Pell, P.J., & Richards, A. (2011). Cross-emotion facial expression aftereffects. Vision Research, 51(17), 1889-1896. doi:10.1016/j.visres.2011.06.017

Pitcher, D., Duchaine, B., & Walsh, V. (2014). Combined TMS and fMRI reveal dissociable cortical pathways for dynamic and static face perception. Current Biology, 24(17), 2066-2070. doi:10.1016/j.cub.2014.07.060

Prigent, E., Amorim, M.-A., & de Oliveira, A.M. (2018). Representational momentum in dynamic facial expressions is modulated by the level of expressed pain: Amplitude and direction effects. Attention, Perception, & Psychophysics, 80(1), 82-93. doi:10.3758/s13414-017-1422-6

Pye, A., & Bestelmeyer, P.E.G. (2015). Evidence for a supramodal representation of emotion from cross-modal adaptation. Cognition, 134, 245-251. doi:10.1016/j.cognition.2014.11.001

R Core Team (2015). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. Retrieved from http://www.r-project.org/.

Rhodes, G., Jeffery, L., Clifford, C.W.G., & Leopold, D.A. (2007). The timecourse of higher-level face aftereffects. Vision Research, 47(17), 2291-2296. doi:10.1016/J.VISRES.2007.05.012

Rhodes, G., & Leopold, D.A. (2011). Adaptive norm-based coding of face identity. In A.J. Calder, G. Rhodes, M.H. Johnson, & J.V. Haxby (Eds.), The Oxford handbook of face perception (pp. 263-286). Oxford: Oxford University Press. doi:10.1093/oxfordhb/9780199559053.013.0014

de la Rosa, S., Giese, M., Bülthoff, H.H., & Curio, C. (2013). The contribution of different cues of facial movement to the emotional facial expression adaptation aftereffect. Journal of Vision, 13(1), 1-15. doi:10.1167/13.1.23

Rutherford, M.D., Chattha, H.M., & Krysko, K.M. (2008). The use of aftereffects in the study of relationships among emotion categories. Journal of Experimental Psychology: Human Perception and Performance, 34(1), 27-40. doi:10.1037/0096-1523.34.1.27

Skinner, A.L., & Benton, C.P. (2010). Anti-expression aftereffects reveal prototype-referenced coding of facial expressions. Psychological Science, 21(9), 1248-1253. doi:10.1177/0956797610380702

Skuk, V.G., & Schweinberger, S.R. (2013). Adaptation aftereffects in vocal emotion perception elicited by expressive faces and voices. PLoS ONE, 8(11), 1-13. doi:10.1371/journal.pone.0081691

Storrs, K.R. (2015). Are high-level aftereffects perceptual? Frontiers in Psychology, 6, 157:1-4. doi:10.3389/fpsyg.2015.00157

Thornton, I.M. (1997). The perception of dynamic human faces. Unpublished doctoral dissertation, University of Oregon. Retrieved from http://www.ianthornton.com/publications/pubs/Thornton 1997 Thesis.pdf.

Wang, X., Guo, X., Chen, L., Liu, Y., Goldberg, M.E., & Xu, H. (2017). Auditory to visual cross-modal adaptation for emotion: Psychophysical and neural correlates. Cerebral Cortex, 27(2), 1337-1346. doi:10.1093/cercor/bhv321

Watson, R., Latinus, M., Noguchi, T., Garrod, O., Crabbe, F., & Belin, P. (2014). Crossmodal adaptation in right posterior superior temporal sulcus during face-voice emotional integration. Journal of Neuroscience, 34(20), 6813-6821. doi:10.1523/JNEUROSCI.4478-13.2014

Yoshikawa, S., & Sato, W. (2008). Dynamic facial expressions of emotion induce representational momentum. Cognitive, Affective & Behavioral Neuroscience, 8(1), 25-31. doi:10.3758/CABN.8.1.25

Zhegallo, A.V. (2016). PXLab software as an instrument for eyetracking research using SMI eye trackers. The Russian Journal of Cognitive Science, 3(3), 43-57. (In Russian). Retrieved from http://cogjournal.org/3/3/pdf/ZhegalloRTCS2016.pdf.