Assessment of the digital competence in Russian adolescents and parents: Digital Competence Index

Published: Dec. 2, 2014

Latest article update: Dec. 20, 2022

Abstract

In this paper, we developed a psychological model of digital competence comprising four components (knowledge, skills, motivation and responsibility) and four spheres (working with online content, communication, technical activity and consumption). The Digital Competence Index (DCI) is a 52-item instrument that yields both a general index and a full profile of digital competence. In a Russian population study (1203 adolescents aged 12-17 years and 1209 parents), the DCI showed acceptable reliability (.72-.90 for all scales except motivation). Confirmatory factor analysis supported the superiority of the four-component structure with a second-order index. The mean DCI was 34% of the maximum possible level in adolescents and 31% in parents, indicating a need for educational programs in Russia. Motivation was both the lowest and the least homogeneous component, indicating that special efforts are needed to improve Russian adolescents' motivation to learn about the Internet.

Keywords

Russian population study, Digital Competence Index, online risks, Kids online project, digital competence

European population studies of online risks among adolescents clearly demonstrate (Livingstone & Haddon, 2009) that a high level of Internet use among modern children does not mean that they are skilled enough to be safe online. The Russian part of this research (Soldatova et al., 2013) showed that Russian children encountered online risks more frequently than their European peers, yet neither adolescents nor their parents were well-informed or well-skilled enough to cope with them (Soldatova & Zotova, 2013). The problem of children's online safety is therefore more urgent in Russia than in Western Europe, which has a long history of developing and implementing social psychological programs aimed at improving digital literacy and regulating online risks. Many approaches to overcoming this situation are currently being actively developed in Russia (Kuz’min & Parshakova, 2013; Media and informational literacy..., 2012), but they typically do not offer reliable assessment strategies and are not based on empirical data. The aim of this study was to develop a psychological model of digital competence and an instrument for assessing it that would be feasible, reliable and valid for evaluating not only the efficacy of social programs but also their impact (Prochaska et al., 2008).

Digital literacy and digital competence

The term “digital literacy” gained popularity thanks to a book by Paul Gilster (1997), who defined it as the ability to critically understand and use information received via a computer in various formats and from various sources. This definition was elaborated by Allan Martin (Martin & Madigan, 2006) as a person's awareness, attitudes and ability to appropriately use digital tools and facilities to identify, access, manage, integrate, evaluate, analyze and synthesize digital resources, to construct new knowledge and to communicate with others.

The further extension of this definition into the concept of digital competence (Ilomaki et al., 2011) can be explained by two main factors. First, with the rapid growth of Internet use among children and adolescents, the Internet has shifted from being a specific activity to being a “whole world”, with opportunities and activities as diverse as those of the “offline” world. Under these conditions, analysis of a person's critical attitude toward information should include not only knowledge and skills but also motivation, values and the type of online activity. Second, the range of social relationships and roles that can be maintained via the Internet has become much wider than “user” and “programmer”. In this context, some authors suggest analyzing the Internet as a place of a special culture and of “digital citizenship” (Mossberger et al., 2008), emphasizing the importance of understanding social relationships on the Internet. Based on these ideas, we consider digital competence as a part of social competence (Asmolov & Soldatova, 2006) that should be analyzed in terms of knowledge, skills, beliefs, motivation and behavior on the Internet.

Psychological model of digital competence

We define digital competence as a person's capability and readiness to choose and use information and communication technologies confidently, effectively, critically and safely in various domains (information environment, communication, consumption and the techno-sphere), based on continuously acquired competencies (a system of knowledge, skills, motivation and responsibility). In other words, digital competence is not merely the sum of the general user and professional knowledge and skills described in various models of ICT competence and information competence; it also emphasizes effective activity and a personal attitude toward this activity grounded in a sense of responsibility.

Treating responsibility as a component of digital competence requires an understanding of the rights and duties of the “digital” citizen, as well as of the rules of behavior in the digital world. Responsibility is also closely related to the safety of modern information and communication technologies for children and adolescents. This safety involves not only adults ensuring technical safety for themselves and their children but also users knowing to contact specialized services when they encounter online risks, understanding what is and is not appropriate in online communication (regardless of the degree of anonymity), and feeling that people should be as careful on the Internet as they are in their offline lives. Digital competence should include the knowledge and skills that enable adults and children to use the Internet safely and critically. The opportunities that ICT offers for learning and self-education can be used effectively only in conjunction with the intention to minimize the risks that new technologies may pose.

Globalism and inclusivity, as key features of the Internet, not only determine its spread into various spheres of human life but also shape how digital competence manifests itself in various areas and activities. We distinguish four spheres of human activity in which the tremendous opportunities and risks of the Internet are fully demonstrated (see Table 1): the information (content) sphere (creation, search, selection and critical evaluation of content), the sphere of communication (creation, development and maintenance of relationships, identity, reputation and the processes of self-presentation), the sphere of consumption (use of the Internet for consumer purposes: orders, services, shopping, etc.) and the techno-sphere (computer and software-related skills, including those necessary to ensure technical safety). Accordingly, there are four types of digital competence:

- Information and media competence - knowledge, skills, motivation and responsibility associated with search, understanding, organizing, archiving of digital information and its critical evaluation, as well as with the creation of materials based on digital technologies (texts, images, audio and video);

- Communicative competence - knowledge, skills, motivation and responsibility required for online communication in various forms (email, chats, blogs, forums, social networks, etc.) with various purposes;

- Technical competence - knowledge, skills, motivation and responsibility allowing use of a computer and appropriate software effectively and safely for solving various problems, including the use of computer networks;

- Consumption competence - knowledge, skills, motivation and responsibility needed to solve (using a computer and the Internet) a variety of everyday tasks associated with specific life situations that involve satisfying various needs.

In each of its spheres, digital competence is associated with motivation and responsibility. The motivational component involves forming a meaningful intention to develop and achieve digital competence as the basis of adequate digital activity that complements other human activities in modern times. The responsibility component includes, in addition to motivation, the competencies needed to stay safe online: skills for ensuring safety when using the Internet (1) as a source of information, (2) for online communication and (3) for solving various consumption-related problems, as well as (4) skills for ensuring technical safety in all of these activities (Table 1).

Table 1. Digital Competence Index: spheres and components

| Component | Spheres in which the component is implemented |
| --- | --- |
| Knowledge | Content, communication, technical aspects, consumption |
| Skills | Content, communication, technical aspects, consumption |
| Motivation | Content, communication, technical aspects, consumption |
| Responsibility and safety | Content, communication, technical aspects, consumption |

Each of these components of digital competence can be implemented in different ways in each of the four spheres. Communicating online; searching for, downloading and creating content; solving technical problems; and making purchases and payments are different activities, and consequently each of them demands different resources and competencies. Metaphorically speaking, both “retardation” in digital development and “digital genius” can be either general (covering many areas) or partial (limited to some areas). Therefore, in the investigation of digital literacy, it is important to study both its components and the spheres in which each component may develop and be implemented in specific ways.

Operationalization and screening of digital competence

Currently, many organizations around the world offer courses in digital literacy and assess and certify it (e.g., Global Digital Literacy Council, Council of European Professional Informatics Societies, Microsoft, Digital Literacy Best Practices). Developing a new index of digital competence requires systematizing these approaches and discussing why the existing models and indicators seem insufficient for the requirements and theoretical approaches discussed above.

The starting point for the development of all social indices (Sirgy et al., 2006) is the recognition that objective economic indices (for instance, gross domestic product as a measure of a country's well-being, or the availability of Internet access as a measure of digital competence) are insufficient to capture the social processes behind a given phenomenon.

The first attempts to overcome these difficulties usually involve a shift to entirely subjective indicators, often single items (for example, people are asked to rate their well-being on a scale). In the field of information technology, an example of this approach is the COQS index, developed within the Statistical Indicators Benchmarking the Information Society (SIBIS) project. In this index, people rate themselves on four scales corresponding to the following groups of skills: communicating with others online (Communicating), obtaining (or downloading) and installing software on a computer (Obtaining), questioning the source of information on the Internet (Questioning) and searching for required information using search engines (Searching). Despite their ease of use, such methods are unreliable and oversimplify the picture. In particular, COQS covers only a few examples of skills and does not consider knowledge, motivation, responsibility or other online activities.

The next step in the development of approaches to diagnosing digital competence involves creating indices based on a series of indicators. Indicators are selected in one of two ways. The first is to select a large number of indicators and, in some cases, to narrow them down afterwards by a statistical criterion (e.g., weights in a regression equation predicting an outcome variable, or factor analysis of the scale structure). In the second, a theoretical model is proposed first, and items are selected to describe this model as completely as possible. After empirical data are collected, the structure of the index can be refined to better match the model. The advantage of this latter approach is that the final instrument is clear and logical, as well as more economical. Below we consider these two approaches in detail.

Selecting indicators based on a regression equation is optimal if the aim is prediction; its limitation is that it is not always possible to define a “gold standard” that we would like to predict (and that can be measured). This issue applies in particular to digital competence, for which there is no “gold standard” measurement that could serve as a reference point in creating an index.

The idea of identifying the factor structure of an instrument and then selecting the items (indicators) with maximum loadings on these factors has been implemented in the ICT Development Index (IDI; Measuring the information society, 2012). The index consists of 11 indicators, selected based on expert appraisals and grouped into three scales: access to information and communication technologies (having computers, Internet access, mobile network coverage, etc.), use of information and communication technologies (percentage of users) and skills (literacy). The weight of each component in the general index was determined by factor analysis (principal component method). However, this approach has an even greater problem than the previous one: the factor structure and loadings depend on which indicators were selected as the initial set, how many there are and how they relate to each other. As a result, the final scale of the index may reflect the researchers' preferences rather than reality.
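The principal-component weighting mentioned above, and its sensitivity to the initial indicator set, can be illustrated with a minimal Python sketch, assuming NumPy is available. It takes the first principal component of the indicators' correlation matrix and rescales its loadings to sum to 1. This is a generic illustration, not the ITU's published IDI procedure; the helper name `pca_weights` is hypothetical and the data are random placeholders.

```python
import numpy as np


def pca_weights(data: np.ndarray) -> np.ndarray:
    """Weights (summing to 1) taken from the first principal component.

    `data` has shape (n_observations, n_indicators). Hypothetical helper,
    not the ITU's published IDI procedure.
    """
    # PCA on the correlation matrix is equivalent to PCA on standardized indicators.
    corr = np.corrcoef(data, rowvar=False)
    _, eigvecs = np.linalg.eigh(corr)  # eigh returns eigenvalues in ascending order
    first = eigvecs[:, -1]  # loadings on the component with the largest eigenvalue
    first = first * np.sign(first.sum())  # eigenvector sign is arbitrary; make it positive
    return first / first.sum()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    latent = rng.normal(size=(500, 1))
    # Placeholder data: three noisy indicators of a single latent level.
    three = latent + rng.normal(scale=[0.5, 1.0, 1.5], size=(500, 3))
    print("3 indicators:", pca_weights(three).round(3))
    # Add a near-duplicate of the first indicator and recompute the weights.
    dup = three[:, :1] + rng.normal(scale=0.1, size=(500, 1))
    four = np.hstack([three, dup])
    print("4 indicators:", pca_weights(four).round(3))
```

Adding a near-duplicate indicator pulls weight toward that cluster and away from the other indicators, which is the dependence on the researchers' choice of initial indicators criticized in the paragraph above.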

A more individual-level approach is provided by indices evaluating users' skills. Typically, experts select the skills so as to maximize coverage of an area. An example is the Microsoft Computing Safety Index, which aims to assess people's skills in ensuring their own safety on the Internet (using new operating systems, antivirus software, automatic updates, etc.). However, most of these indices are limited to a specific area, such as children's online safety, search skills, communication skills training or computer security skills, and so do not allow general screening of digital competence.

Thus, previous studies have yielded many insights and findings regarding skills as a component of digital competence and, to a lesser extent, knowledge. They have focused primarily on activities with online content (e.g., information search) and on technical skills. Most of these initiatives were aimed at training a specific group of people (students, professionals, etc.) and did not consider motivation, responsibility or a person's relationship to the Internet.

The first aim of this study was to develop a screening instrument for the general assessment of digital competence (an index) and of its profile across components and spheres of online activity. The second aim was to validate the index on a representative Russian sample of adolescents and parents. In this paper, we describe part of the validation study, namely the appraisal of the reliability and factor validity of the index.

Method

The study was conducted by the Foundation for Internet Development and the Department of Psychology of Lomonosov Moscow State University with the support of Google. Interviews were conducted by the Yuri Levada Analytical Center using multilevel stratified representative samples of adolescents aged 12-17 years and parents of children of the same age living in Russian cities with populations of 100,000 or more. Fifty-eight cities in 45 regions across all eight Federal Districts were randomly selected. Sample sizes were proportional to the population of each city.
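Proportional allocation of a sample across cities can be sketched in Python. This is a hypothetical illustration, not the Levada Center's actual procedure: the city names and populations are invented, and the largest-remainder rounding rule (used so that the integer quotas add up exactly to the target sample size) is our assumption.

```python
def allocate_proportionally(populations: dict[str, int], total: int) -> dict[str, int]:
    """Split `total` interviews across cities in proportion to population.

    Hypothetical helper: uses the largest-remainder method so that the
    integer quotas sum exactly to `total`.
    """
    pop_sum = sum(populations.values())
    # Exact (fractional) quota for each city, proportional to its population share.
    exact = {city: total * pop / pop_sum for city, pop in populations.items()}
    # Start from the floor of each quota.
    quotas = {city: int(q) for city, q in exact.items()}
    # Give the leftover interviews to the cities with the largest remainders.
    leftover = total - sum(quotas.values())
    by_remainder = sorted(exact, key=lambda c: exact[c] - quotas[c], reverse=True)
    for city in by_remainder[:leftover]:
        quotas[city] += 1
    return quotas


if __name__ == "__main__":
    cities = {"City A": 1_200_000, "City B": 450_000, "City C": 150_000}  # invented
    print(allocate_proportionally(cities, 1203))  # {'City A': 802, 'City B': 301, 'City C': 100}
```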

Participants. Participants in the study included 1203 adolescents aged 12-17 years (50% female; 12-13 years old: 2%; 14-15 years old: 35%; 16-17 years old: 33%) and 1209 parents (69% female; 28-39 years old: 47%; 40-49 years old: 45%; over 50 years old: 8%) of children in this age range. Most of the adolescents were in middle or high school (89%); the others were students at a college (9%) or a university (2%). Thirteen percent of the parents had a basic education, 40% had a professional education, and 47% had a university education.

Methods. The Digital Competence Index (DCI) was developed by a group of psychologists in collaboration with Google on the basis of the psychological model of digital competence. The DCI is a 56-item screening instrument comprising four scales that assess online knowledge (10 items), skills (25 items), motivation (10 items) and responsibility (11 items) in each of the four spheres of online activity (working with content, communication, technical activity and consumption). Participants were asked to select from a list, choosing as many options as they wished, the things they know about, can do well or excellently, and would like to learn about on the Internet (see Table 2). The profile of digital competence included 16 subscales (four components × four spheres).

Table 2. Examples of DCI items

| Digital competence component | Instruction for adolescents | Sphere of online activity | Item example |
| --- | --- | --- | --- |
| Knowledge | Please choose from this list all of the things you know rather well. It means that you can say you generally have enough knowledge about it. | Content | Various search engines on the Internet (to search for information, music, photos, video, etc.). |
| Skills | Please choose from this list all of the things you have already done on the Internet and can say that you are skilled enough to do. | Communication | To interact with the participants of various Internet communities (Twitter, forums, wikis, etc.). |
| Motivation | Please choose from this list all of the things about which you would like not only to learn more but also to learn how to use them successfully. | Technical activity | Possibilities to set up software updates on the device I use to go on the Internet. |
| Responsibility | Please choose from this list all of the things you are able to do on the Internet. | Consumption | To determine the degree of privacy and security of personal data transfer when using services via the Internet. |

For each subscale, the final score was computed as the percentage of its items that the respondent selected. The general index is the mean of the four component scores, so all of the components contribute to the index in equal proportion. This scoring approach was chosen to make the index easy for non-specialists to use and interpret (face validity).
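The scoring rule above can be sketched in Python. The sketch assumes that each response is recorded as a set of selected item IDs and that every item is tagged with its component and sphere. The item IDs, the `score_dci` name and the choice to compute a component score as the percentage of all of that component's items selected (rather than, say, the mean of its four sphere subscales) are our assumptions, not details given in the text.

```python
from statistics import mean

COMPONENTS = ("knowledge", "skills", "motivation", "responsibility")
SPHERES = ("content", "communication", "technical", "consumption")


def percent_selected(items: list[str], selected: set[str]) -> float:
    """Percentage of `items` that the respondent selected."""
    return 100.0 * sum(item in selected for item in items) / len(items)


def score_dci(item_map: dict[str, tuple[str, str]], selected: set[str]) -> dict:
    """Return subscale scores, component scores and the general index.

    `item_map` maps a (hypothetical) item ID to its (component, sphere) pair.
    """
    subscales = {}
    for comp in COMPONENTS:
        for sphere in SPHERES:
            items = [i for i, cs in item_map.items() if cs == (comp, sphere)]
            if items:  # skip empty cells instead of dividing by zero
                subscales[(comp, sphere)] = percent_selected(items, selected)
    components = {
        comp: percent_selected([i for i, (c, _) in item_map.items() if c == comp], selected)
        for comp in COMPONENTS
    }
    # Unweighted mean: each component counts equally, whatever its item count.
    index = mean(components.values())
    return {"subscales": subscales, "components": components, "index": index}


if __name__ == "__main__":
    # Toy item map with one item per component x sphere cell (16 items).
    item_map = {f"{c}-{s}": (c, s) for c in COMPONENTS for s in SPHERES}
    chosen = {f"knowledge-{s}" for s in SPHERES} | {"skills-content"}
    result = score_dci(item_map, chosen)
    print(result["components"], result["index"])  # index: (100 + 25 + 0 + 0) / 4 = 31.25
```

Because the DCI's components have different numbers of items (10, 25, 10 and 11), averaging component percentages means that one knowledge or motivation item moves the index by 2.5 points, whereas one skills item moves it by only 1 point; this is what "equal proportion" implies in practice.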

To ensure the feasibility of the index, a pilot study with 20 adolescents and 20 parents was conducted. All participants were interviewed about any difficulties they had with the questionnaire and any suggestions regarding it. Unclear and ambiguous items and instructions were reformulated.

Results

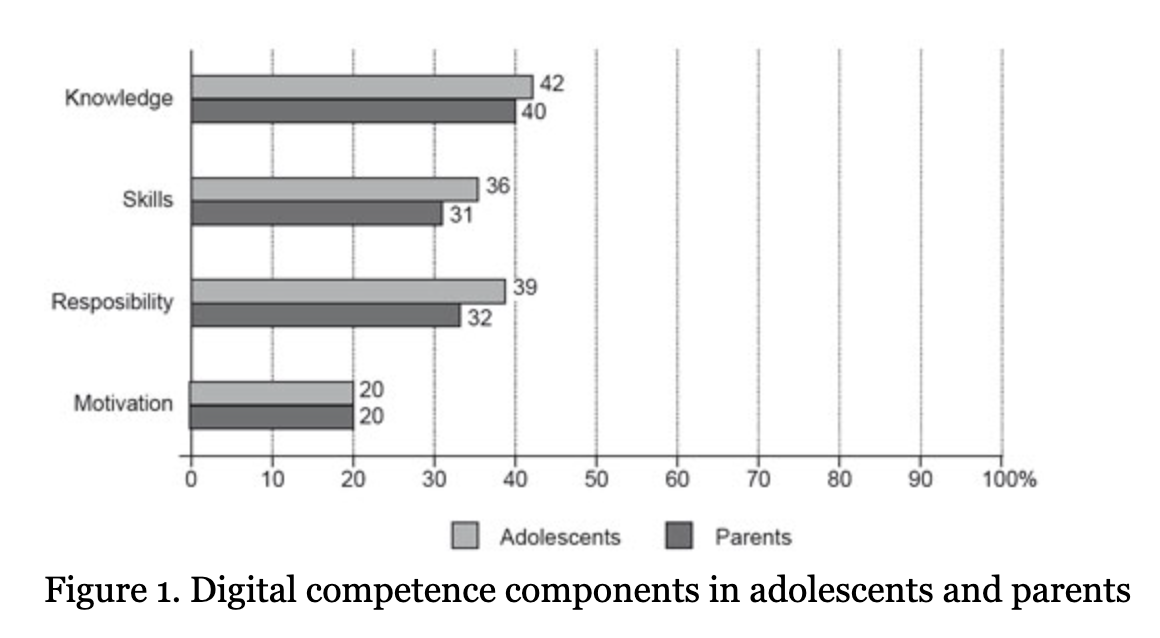

The mean digital competence index (M ± SD) was 34 ± 16% in the adolescent sample and 31 ± 18% in the parent sample, indicating that participants' digital competence was only about one-third of the possible maximum. The most prominent component was knowledge, and the least prominent was motivation (Fig. 1). Both parents and adolescents were most competent in the sphere of working with online content (38% and 46%, respectively) and least competent in the sphere of consumption (27% and 18%, respectively).

As shown in Table 3, Cronbach's alphas ranged from medium to high (.72-.90) for all digital competence components except motivation. Because the items of the motivation scale are the same as those of the knowledge scale, which proved reliable, the low reliability of the motivation scale is unlikely to reflect poor psychometric quality of the items. We suggest that in the contemporary Russian context, both adolescents and parents have typically acquired their online knowledge and skills spontaneously and have a poor understanding of what they should learn about the Internet. As motivation develops, motivation scores should become not only higher but also more consistent.
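Cronbach's alpha compares the sum of the item variances with the variance of the total score: alpha = k/(k - 1) * (1 - sum of item variances / total-score variance), where k is the number of items. For 0/1 checklist items such as the DCI's, it coincides with KR-20. A minimal Python sketch follows; the function name is hypothetical and the toy response matrix is invented for illustration.

```python
from statistics import pvariance


def cronbach_alpha(rows: list[list[int]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of item scores.

    Population variances are used throughout; the sample-variance convention
    gives the same alpha because the n/(n - 1) factors cancel in the ratio.
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [pvariance([row[j] for row in rows]) for j in range(k)]
    total_var = pvariance([sum(row) for row in rows])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Invented 0/1 answers: 4 respondents x 3 checklist items.
    toy = [[1, 1, 1], [1, 1, 0], [0, 0, 0], [1, 0, 0]]
    print(cronbach_alpha(toy))  # 0.75
```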

Confirmatory factor analysis (Brown, 2006) was used to assess the factor validity of the construct. We compared the fit of three nested models. Model 1 included four components (knowledge, skills, motivation and responsibility) and a second-order general index. Model 2 consisted of four independent components. In Model 3, all items loaded on the general index with no components. In both adolescents and parents, Model 1 (CFI = .77, RMSEA = .04 for adolescents; CFI = .81, RMSEA = .05 for parents) fit better than Model 2 (∆χ2 = 1415, ∆df = 5, p < .001 and ∆χ2 = 1779, ∆df = 5, p < .001, respectively) and Model 3 (∆χ2 = 945, ∆df = 5, p < .001 and ∆χ2 = 775, ∆df = 5, p < .001, respectively).
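The nested-model comparisons above rest on the chi-square difference test: ∆χ2 is referred to a chi-square distribution with ∆df degrees of freedom. The following standard-library Python sketch assumes ordinary maximum-likelihood chi-square values (robust estimators such as Satorra-Bentler require a scaled difference instead) and computes the p-value from the closed-form chi-square survival function for integer degrees of freedom. The function names are our own.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) of a chi-square variable with integer df."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) + sqrt(2x/pi) * exp(-x/2) * sum_{m=0}^{(df-3)/2} x^m / (1*3*...*(2m+1))
    term, total = 1.0, 0.0
    for m in range((df - 1) // 2):
        if m > 0:
            term *= x / (2 * m + 1)
        total += term
    return math.erfc(math.sqrt(half)) + math.sqrt(2 * x / math.pi) * math.exp(-half) * total


def chi2_difference_p(delta_chi2: float, delta_df: int) -> float:
    """p-value of a chi-square difference test between two nested models."""
    return chi2_sf(delta_chi2, delta_df)


if __name__ == "__main__":
    # Differences reported in the text for Model 2 vs Model 1 (adolescents, parents).
    for d_chi2 in (1415, 1779):
        print(d_chi2, chi2_difference_p(d_chi2, 5))
```

For differences this large, the printed p-values underflow to (or very nearly to) zero in double precision, consistent with the reported p < .001.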

Table 3. Reliability of the digital competence components in adolescents and parents

| Digital competence component | Cronbach's alpha, adolescents | Cronbach's alpha, parents |
| --- | --- | --- |
| Knowledge | .79 | .72 |
| Skills | .90 | .86 |
| Motivation | .53 | .46 |
| Responsibility | .81 | .79 |

Discussion

The Digital Competence Index is a screening instrument that can be used to assess general online competence as well as its profile in adolescents and adults. All scales except motivation were found to be reliable. We hypothesize that the low consistency of the motivation scale reflects poorly developed goals for learning on the Internet and their weak implementation, which is typical of the contemporary Russian context. Confirmatory factor analysis supported the four-component factor structure with a second-order index in both adolescents and adults. Theoretically, the major advantage of the DCI is that it explicitly considers motivation and responsibility (which are central to digital “citizenship”; see, e.g., Mossberger et al., 2008) and builds a profile of competence across various spheres and domains.

Further research should concentrate on three points. First, the construct validity of the DCI (e.g., comparison with performance on online tasks) and its responsiveness to change should be explored further. Second, the motivation and responsibility components should be studied and elaborated. In the DCI, the responsibility scale mainly describes online safety skills, whereas the motivation scale assesses readiness to learn general skills and knowledge about the Internet. However, both personal motivation and responsibility for online activity also involve a person's relationship to the Internet, emotions experienced online, coping skills, etc. Third, the low level of digital competence in Russia (especially in the motivational component) indicates the need for educational programs aimed not only at improving online knowledge and skills but also at developing self-regulation in choosing and pursuing goals on the Internet (motivational component) and a responsible personal attitude toward online events (responsibility component).

Acknowledgments

The study was supported by the Russian Foundation for Humanities, project 14-06-00646a.

References

- Asmolov, A., & Soldatova, G. (Eds.) (2006). Sotsial’naya kompetentnost’ klassnogo rukovoditelya: rezhissura sovmestnykh deistvii [Social competence of the teacher: direction of cooperation]. Moscow: Smysl.

- Brown, T. (2006). Confirmatory factor analysis for applied research. New York: Guilford Press.

- Gilster, P. (1997). Digital literacy. New York: Wiley Computer Publishing.

- Ilomaki, L., Lakkala, M., & Kantosalo, A. (2011). What is digital competence? Linked portal. Brussels: European Schoolnet (EUN), 1-12.

- Kuz’min, E., & Parshakova, A. (Eds.) (2013). Media- i informatsionnaya gramotnost’ v obshchestvakh znanii [Media and information literacy in knowledge societies]. Moscow: MTsBS.

- Livingstone, S., & Haddon, L. (2009). EU Kids Online: Final report. London: LSE, EU Kids Online. Retrieved from: http://www.lse.ac.uk/media@lse/research/EUKidsOnline/EU%20Kids%20I%20(2006-9)/EU%20Kids%20Online%20I%20Reports/EUKidsOnlineFinalReport.pdf

- Martin, A., & Madigan, D. (Eds.) (2006). Digital literacies for learning. London: Facet.

- Mediinaya i informatsionnaya gramotnost’: programma obucheniya pedagogov. [Media- and information literacy: program for teachers] (2012). Moscow: Institut YuNESKO po informatsionnym tekhnologiyam v obrazovanii.

- Measuring the information society. (2012). Geneva: International Telecommunication Union. Retrieved from: http://www.itu.int/ITU-D/ict/publications/idi/material/2012/MIS2012_without_Annex_4.pdf

- Mossberger, K., Tolbert, C., & McNeal, R. (2008). Digital citizenship: The internet, society, and participation. Cambridge, MA: MIT Press.

- Prochaska, J., Wright, J., & Velicer, W. (2008). Evaluating theories of health behavior change: A hierarchy of criteria applied to the transtheoretical model. Applied Psychology, 57(4), 561-588. doi:10.1111/j.1464-0597.2008.00345.x

- Sirgy, M. J., Michalos, A. C., Ferris, A. L., Easterlin, R. A., Patrick, D., & Pavot, W. (2006). The quality of life (QOL) research movement: Past, present and future. Social Indicators Research, 76, 343-466. doi:10.1007/s11205-005-2877-8

- Soldatova, G., Rasskazova, E., Zotova, E., Lebesheva, M., Geer, M., & Roggendorf, P. (2013). Russian Kids Online: Key findings of the EU Kids Online II survey in Russia. Moscow: Foundation for Internet Development. Retrieved from: http://www.lse.ac.uk/media@lse/research/EUKidsOnline/ParticipatingCountries/PDFs/RU-RussianReport.pdf

- Soldatova, G., & Zotova, E. (2013). Coping with online risks: The experience of Russian school- children. Journal of Children and Media, 7(1), 44-59. doi: 10.1080/17482798.2012.739766