Effect of Scientific Argumentation on the Development of Scientific Process Skills in the Context of Teaching Chemistry

Published: Jan. 10, 2015

Latest article update: Jan. 12, 2023

Abstract

This study was conducted to determine the differences in integrated scientific process skills (designing experiments, forming data tables, drawing graphs, graph interpretation, determining the variables and hypothesizing, changing and controlling variables) between students (n = 17) who were taught with an approach based on scientific argumentation and students (n = 17) who were taught with a traditional teaching approach in Grade 11 chemistry. The study was conducted at a high school in Çankırı, Turkey. A multiformat Scientific Process Skills Scale was administered to both groups as a pre- and posttest; it contained 29 items in 5 modules and consisted of limited and unlimited open-ended, multiple-choice, and paper-pencil performance assessment questions. Repeated t-tests and multivariate analysis of covariance (MANCOVA) were applied to analyze the data. It was found that the integrated scientific process skills of students in both groups improved significantly, except for the skills of “forming a data table” and “graph interpretation” in the control group. MANCOVA results revealed that there was a statistically significant difference between the groups on the combination of 5 dependent variables. The teaching approach had a significant effect on integrated scientific process skills except for the skill of designing experiments. In sum, the scientific argumentation-based teaching approach was more effective in acquiring science process skills than the traditional teaching approach.

Keywords

Secondary education, scientific argumentation, chemistry education, scientific process skills

INTRODUCTION

With scientific knowledge accumulating rapidly, scientific literacy is becoming more important for every individual. The purpose of scientific literacy is not to make all individuals experts on science and technology; rather, its purpose is to help people who have studied compulsory basic science keep pace with the world they live in, understand the facts and events they face, think rationally, and have confidence in themselves in today’s information age (Laugksch & Spargo, 1996).

When students gain the habits of mind that comprise experience and scientific literacy through inquiries, problem solving and critical thinking, they also learn how to apply basic scientific concepts to everyday situations (Lee & Fradd, 1996). In high school and university, when advanced science is studied, few individuals can learn the scientific method (of thinking) because science is taught as a discipline based on facts rather than through a discovery method of learning. In today’s information age, the basic aims of science education, in terms of both content and process, are to help students acquire the skills for attaining knowledge, develop an understanding of social and scientific issues, and gain command of scientific facts, concepts, and principles, rather than merely transferring existing knowledge to them (Schafersman, 1991; Van Driel, Beijard, & Verloop, 2001; Zachos, Hick, Doane, & Sargent, 2000). This can only come about through higher cognitive process skills, which include scientific process skills, the scientific method, scientific thinking, and critical thinking. The scientific method, scientific thinking, and critical thinking are terms that have been used at various times to describe these scientific skills. Today the term scientific process skills is commonly used (Padilla, 1990).

Scientific process skills, the basis of scientific literacy, are basic skills that make learning science easier, encourage student participation, improve the feeling of responsibility in learning, increase permanence in learning, make it easier for students to understand how to acquire knowledge that they apply to everyday problems, and prepare students for their future lives (Schafersman, 1991). That these skills are effective in science learning is emphasized in numerous studies (e.g., Chang & Weng, 2000; Flores, 2000; Haden, 1999; Ostlund, 1998; Turpin & Cage, 2004).

When classifications of scientific process skills are examined, it can be seen that these skills are handled in two stages, namely, basic and integrated (Rezba et al., 1995). Basic process skills are: observation, inference and classification, measurement, estimation, using numbers, interaction and using time-space relations. Integrated scientific process skills are well rounded, build upon the basic processes, and require a higher cognitive level. Normally, each integrated process is made up of a combination of two or more basic processes. During this process, also called the experimental process, variables are determined, a hypothesis is built up, data are gathered to back up or rebut that hypothesis, data are recorded, and finally a decision is reached. During this process, students seek to answer the question “How can I discover?” (Harlen, 1999; Rezba et al., 1995).

Numerous research projects (Ergül et al., 2011; Karamustafaoglu, 2011; Kocakulah & Savaş, 2013; Serin, 2009) that focused on the teaching and acquisition of basic and integrated science process skills found that students’ scientific process skills improve as they use them. Teachers cannot expect mastery of experimenting skills after only a few practice sessions. If science educators give students multiple opportunities to use their process skills and encourage critical opinions in different content areas and contexts, then they will support meaningful learning (Padilla, 1990). To achieve this, an activity based on scientific skills, which determine the basic elements of scientific methodology, will guarantee the improvement of knowledge that is based on both scientific concepts and factual concepts (Ebenezer & Haggerty, 1999).

Learning science is not only a matter of students obtaining knowledge about their environment but also of grasping the scientific way of learning, making claims about a problem, persuading the other party, and being able to argue in defense of the claims they have reached through scientific research in order to make them clear (Skoumios, 2009). Hence, science generally improves not through agreements but through objections, conflicts, and arguments. Also, since understanding the relation between a claim and evidence is understanding a claim and the truth, it helps develop scientific skills (Erduran, Simon, & Osborne, 2004). It seems reasonable to conclude that students learn the basic skills better if these skills are considered an important object of instruction and if proven teaching methods are used (Ebenezer & Haggerty, 1999). Learners can acquire scientific process skills when teachers create constructivist learning environments and employ instructional strategies in science education (Onal, 2005, 2008; Scherz, Bialer, & Eylon, 2005). In the scientific process, it is necessary to consider more than one point of view and idea, develop proper solutions, and back these solutions up with data and evidence. If we want students to understand scientific processes and applications, we should add arguments, conflicts, and heuristic rules based on scientists’ interpretations to the results of experimental details rather than only stating them (Nussbaum & Sinatra, 2003). At this point, scientific argumentation has emerged as an important technique (e.g., Arcidiacono & Kohler, 2010; Bricker & Bell, 2008; Cross, Taasoobshirazi, Hendrick, & Hickey, 2008; Erduran, Ardag, & Yakmaci-Guzel, 2006; von Aufschnaiter, Erduran, Osborne, & Simon, 2008). Additionally, since scientific argumentation is a process of using evidence and defending a point of view, it is related to scientific process skills.

Numerous studies have been conducted based on the belief that “learning through arguments is learning how to think”; processes such as obtaining scientific knowledge, explaining, modelling, and hypothesizing (Driver, Newton, & Osborne, 2000), assessing alternatives and evidence, interpreting texts and assessing the viability of scientific claims, and developing mental activities are considered basic components of conducting scientific arguments. Therefore, argumentation is an essential component of doing science and of communicating through scientific claims (Cross et al., 2008; Erduran et al., 2004, 2006; Sampson & Clark, 2011).

Argumentation has cognitive value in science education; from a cognitive perspective, argumentation involves the reasoning of individuals, and in classes where arguments take place students express their thoughts. Expressing thoughts in this way helps students move from their inner psychological area (mind) and rhetorical arguments to their outer psychological area (classroom) and dialogic arguments. In science education, practicing a teaching approach that provides a student-centered learning environment develops students’ cognitive skills as well as skills of reasoning and examination; it supports scientific process skills, reasoning and questioning skills, and improves scientific epistemology (Lemke, 1990; von Aufschnaiter et al., 2008), science literacy, conceptual understanding, and research skills (Simon, Erduran, & Osborne, 2006; von Aufschnaiter et al., 2008).

Recently, science education has paid attention to studies about the importance of argumentation in practice, the evaluation of scientific education, and obtaining scientific knowledge (Driver et al., 2000; Erduran et al., 2004; Sampson & Gleim, 2009). Moreover, most of the research conducted is about how students learn scientific concepts and how they can learn them better (Aydeniz, Pabuccu, Çetin, & Kaya, 2012; Kaya, 2013; Kaya, Erduran, & Çetin, 2012); it is not about the effectiveness of the scientific argumentation method (in which teaching methods such as problem solving, critical thinking, and scientific thinking skills are used) in developing the skills, knowledge, and attitudes that students need to become scientifically literate in science (or chemistry) subjects.

In order to improve their scientific understanding, students need to use arguments in structured activities and to practice doing so. Activities are needed that will help students overcome the gap between knowing what is technically true and having the understanding and ability to say the right things at the right time and in the right place (Osborne, 2002). These activities can be applied easily in a learning environment in which an argumentation-based teaching approach is used. In argumentation-based learning environments, there are activities that help students learn science using claims; for example, expression table, estimation-observation-explanation, designing experiments, forming arguments, theories in race with caricatures, theories in race with opinions and evidence, and concept maps (Osborne, Erduran, & Simon, 2004a). In this study, in the treatment group in which a scientific argumentation-based teaching method was applied, all these activities were used other than concept maps. The effects of the instructional approach on integrated scientific process skills such as determining the variables of an experiment, changing variables, designing an experiment, drawing and interpreting graphs, drawing tables, hypothesizing directed toward the solution of a problem, and determining dependent, independent, and controlled variables according to the hypothesis were investigated. The following research questions were investigated:

- Is there a significant difference between pretest and posttest scores of the control and treatment groups?

- Is there a significant difference between control and treatment group students in terms of integrated scientific process skills?

METHOD

The purpose of the study was to explore the impact of argumentation-based classroom activities on high school students’ integrated scientific process skills compared with the scientific process skills of students taught through a traditional teaching approach. The scientific process skills that students were intended to gain were organized under headings such as before the experiment, experimental stage, and after the experiment, in a way that included the learning outcomes of the Grade 11 Chemistry Teaching Program (2008) (see Gültepe, 2010). Practices applied in the treatment and control groups included the Reaction Rate, Chemical Equilibrium, Solubility Equilibrium, and Acids and Bases Equilibrium units of the Grade 11 curriculum. Since the acquisition of scientific process skills would take time, the research lasted for the two terms of the educational year.

Design

The study was conducted during 2008-09 in an urban public science high school in Çankırı, Turkey, that was selected because of its suitability. There were four Grade 11 classes in the school. Students who participated in the study had been admitted through a central examination and were accomplished in maths and science. Students were assigned to their classes by school management homogeneously according to their exam results. Since their exam scores were more or less the same, the success rates of the classes were similar. Two of the four classes were chosen at random for this research by the school principal; one was assigned as the control group and the other served as the treatment group. The chemistry lessons were conducted with the treatment group through a teaching approach based on scientific argumentation, while the control group was taught by a traditional teaching approach. There were 17 students (7 girls, 10 boys) in the treatment group and 17 students (8 girls, 9 boys) in the control group. The ages of participants ranged between 16 and 17 years. All students were taught by the same chemistry teacher. The study was conducted over a 29-week period on the following topics: reaction rate for 7 weeks, chemical equilibrium for 10 weeks, solubility product for 5 weeks, and acids and bases for 7 weeks. To understand equilibrium in aqueous solutions (acids and bases, solubility equilibrium), dynamic equilibrium in reactions must first be understood. Dynamic equilibrium is related to the reaction rate, which is based on collision theory (Kaya, 2013; Pekmez, 2010). Therefore these concepts follow and build on each other. These subjects, which are a continuation of each other, are abstract, so they are difficult for students to understand, and they mainly require the use of scientific process skills.
In the earlier studies conducted on these subjects, researchers investigated students’ misconceptions and the effect of the instructional approach on students’ conceptual understanding (Atasoy, Akkuş, & Kadayıfçı, 2009; Coştu & Ünal, 2004; Çakmakçı & Leach, 2005; Çelik & Kılıç, 2014; Demircioglu & Yadigaroglu, 2011; Gültepe & Kılıç, 2013; Kaya, 2013; Ozmen et al., 2012). Some of them stated that students had difficulty in understanding graphs and designing an experiment (Coştu & Ünal, 2004; Demircioglu & Yadigaroglu, 2011; Kaya, 2013; Ozmen et al., 2012). In addition, the acquisition of scientific process skills requires a long time (Kılıç, Yıldırım, & Metin, 2010). Therefore, we conducted our research through these subjects, which make up the Grade 11 chemistry curriculum.

At the high school where the study was conducted, students studied Chemistry 4 hours weekly. Chemistry classes of control and treatment groups were taught in accordance with the Grade 11 Chemistry Teaching Program (2008), which involved basic acquisitions such as learning and associating new concepts and improving scientific process skills in the envisaged time. Classes in the two groups were conducted with the same examples and experiments.

In the control group, the teacher (researcher) lectured according to a classic course plan that she had prepared in line with the way chemistry courses were conducted in the high school where the study was carried out. Course books, supplementary materials, and computer animations were used as course materials. The lessons usually started with the teacher’s questions and the students’ answers and then moved on with her narration. Sometimes, however, classes turned into unstructured classroom discussions in which qualifiers, backings, and rebuttals were used. When this happened, the teacher allowed the lesson to continue that way without any interference. After the necessary concepts about the subject had been explained, exercises were done and problems about it were solved.

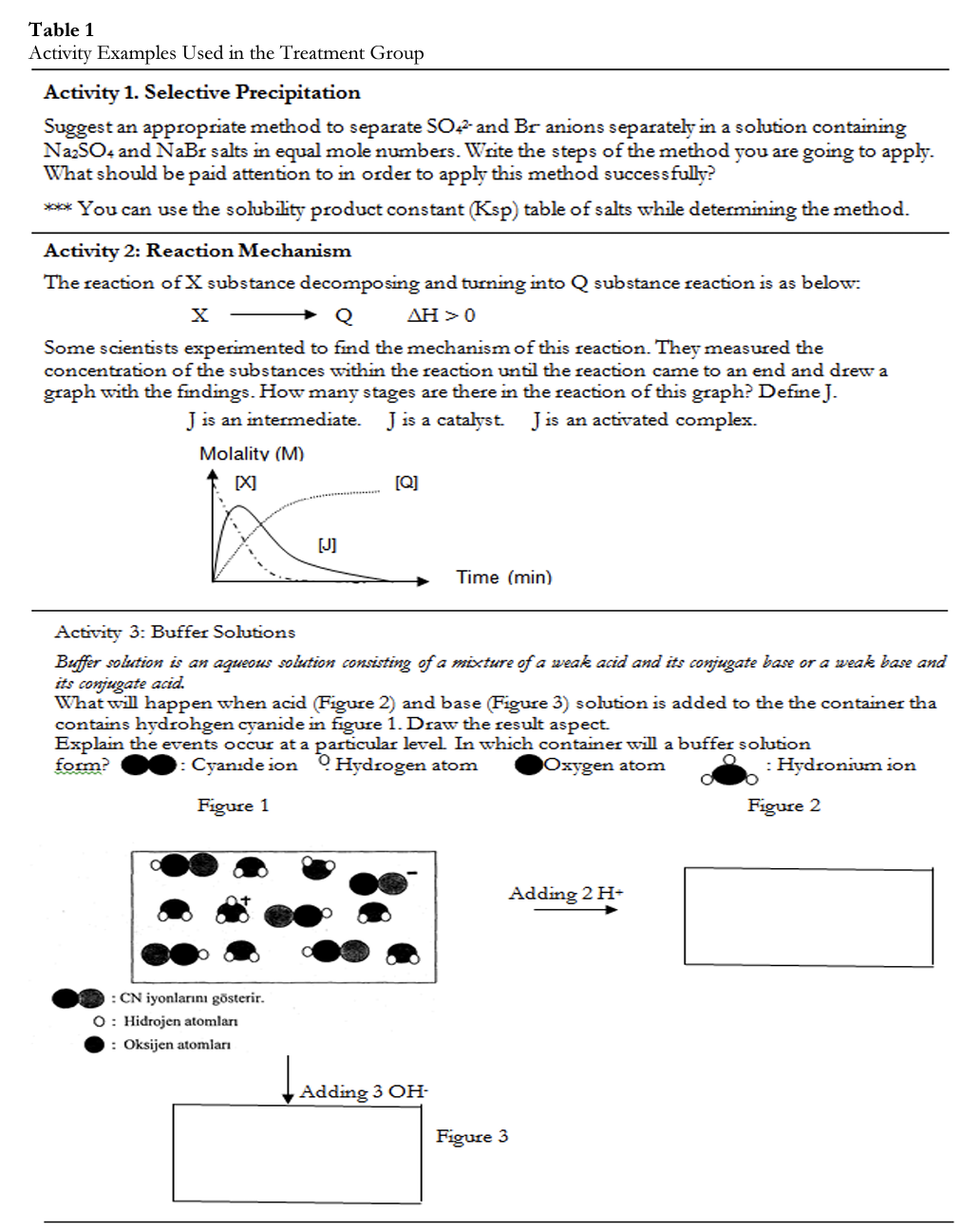

For example, at the beginning of the lesson about buffer solutions, the control group students were first asked what would happen if a strong acid or base were added to a hydrogen cyanide solution and were also asked to interpret the event at the particle level. A conclusion was drawn by evaluating the given answers. Questions about the subject were solved and special attention was paid to the participation of students.

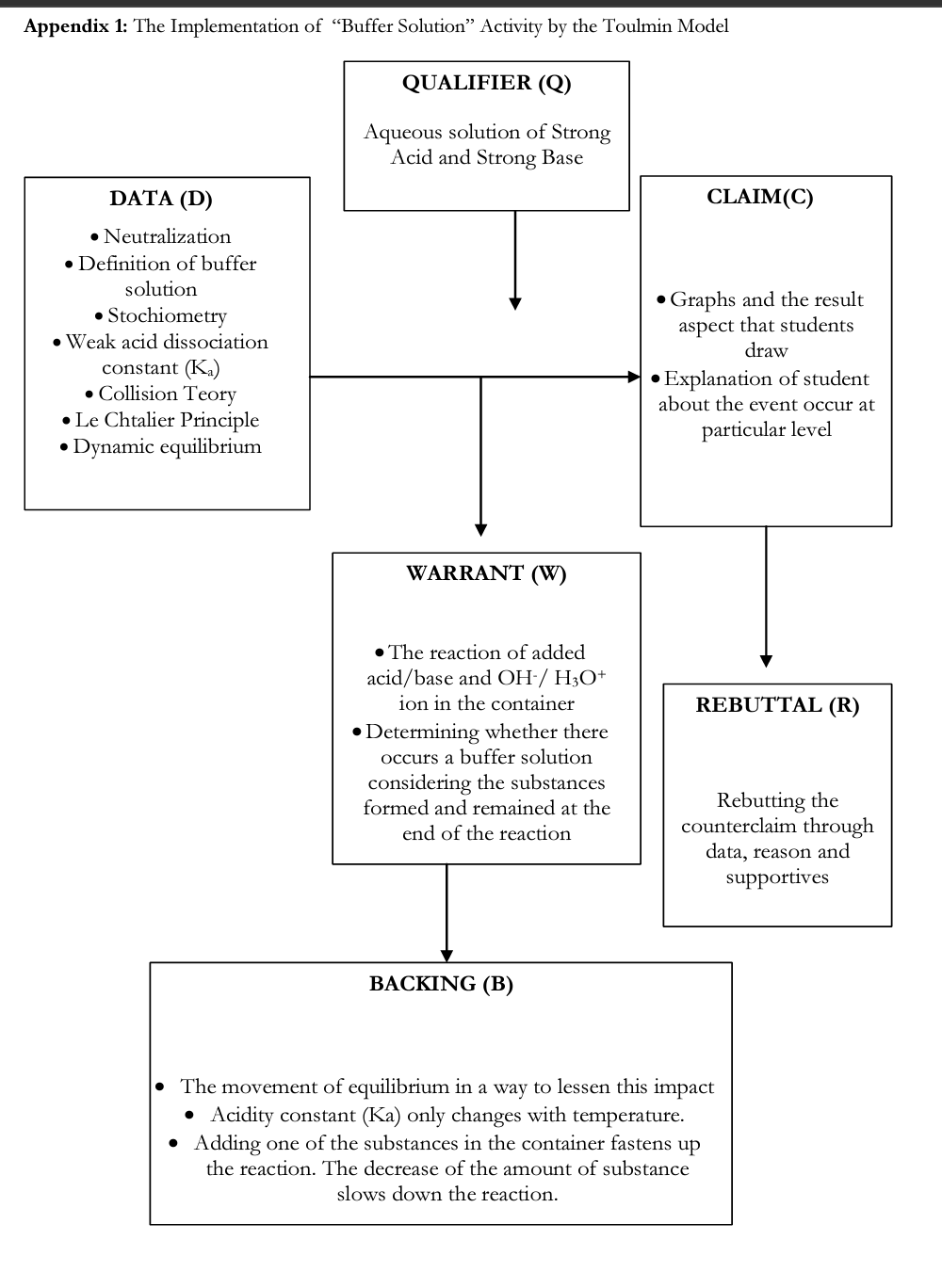

In the treatment group, students were formed into small groups of three to four that considered chemistry concepts and the suggestions of their teacher during the instruction. Students were homogeneously picked for these groups based on their success and participation rate in chemistry class. Students in the groups were changed from time to time. During the four hours of weekly class time, instruction was conducted through discussion activities in a classroom arrangement that the students formed. For the discussion activities, students were asked to construct their claims individually by making use of data, then small-group discussions were held, and finally a classroom discussion was held. While students structured their knowledge and concepts in the scientific approach process, all discussion activities were conducted with a claim based on data and a reason explaining the relationship between (a) a claim, a warrant, and rebuttals; (b) data, a claim, a warrant, and backings; and (c) data, a claim, a warrant, and rebuttals in accordance with Toulmin’s (1958) argumentation model. Discussions sometimes began with students’ questions during lecturing and sometimes with the teacher’s questions; to begin a discussion, activities introduced in the IDEAS (Osborne, Erduran, & Simon, 2004b) packet were usually used.

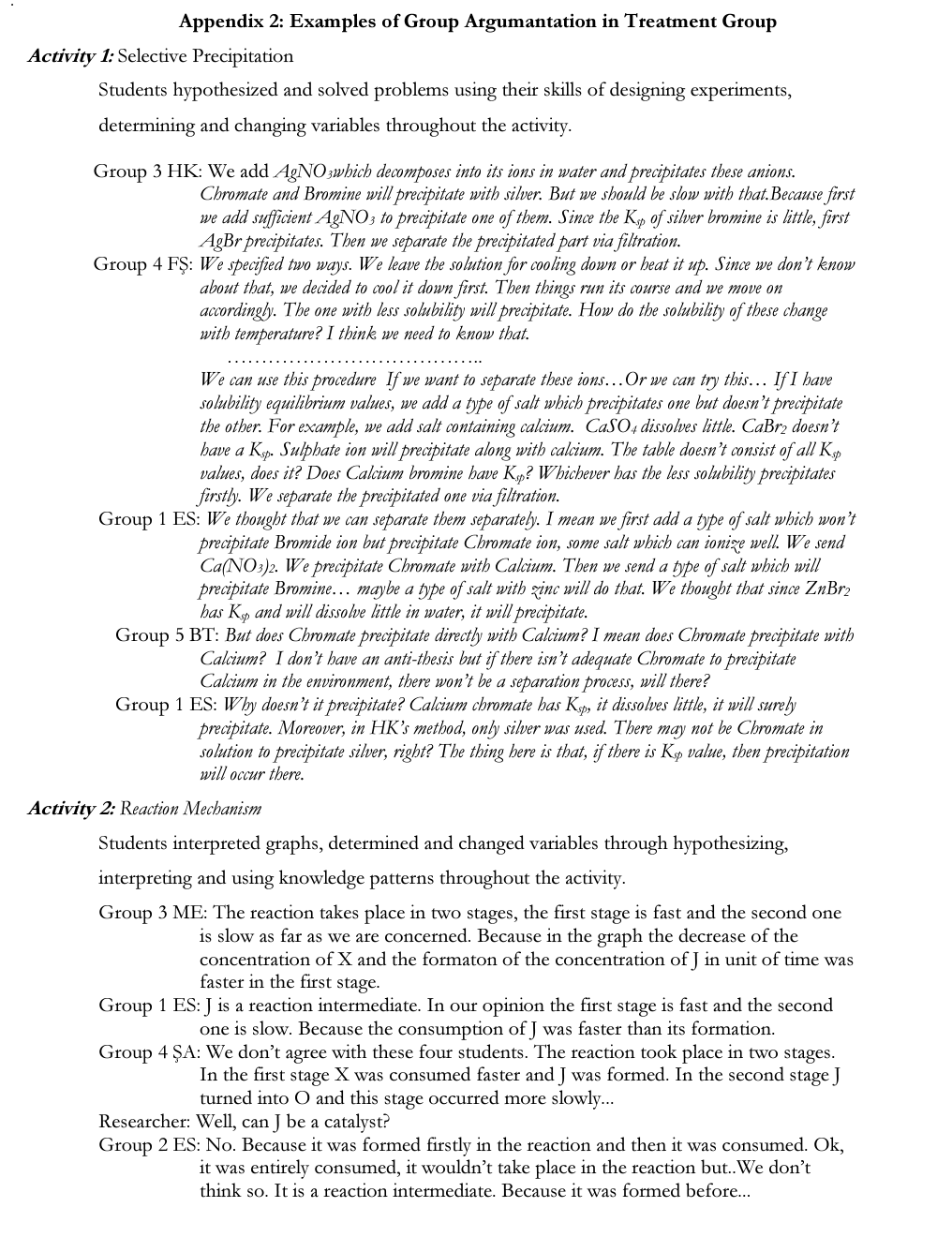

In the treatment group, 13 activities were used for the reaction rate unit, 8 for the chemical equilibrium unit, 5 for the solubility equilibrium unit, and 10 for the acids and bases unit. Examples of activities used in some of the arguments are given in Table 1.

When a certain problem was given to students (see Activity 1 in Table 1), they were asked to follow the path that scientists did, or they were given ready data (e.g., graph, table, numerical values) from a conducted experiment and asked to interpret these data by building cause-effect relations, obtaining results from them, and discussing the accuracy or inaccuracy of these results (see Activity 2 in Table 1).

Activity papers were handed out to each student and they were asked to think them through individually first. Then the individual activity papers were taken back and the students were asked to study in groups of three or four.

As mentioned above for the “Buffer Solution” example, lessons with the control group mostly began with questions and then moved on with the teacher’s lecture and occasional questions. With the treatment group, as in other subjects, buffer solution was studied through an activity (see Activity 3 in Table 1). How the class discussion was conducted according to Toulmin’s argumentation model in the “Buffer Solution” activity, which had been designed to be applied in the “Acids and Bases” unit, is detailed step by step in Appendix 1. As in other activities, in the “Buffer Solution” activity, after information about it was presented, students were asked to specify their claims (hypotheses) about a problem situation.

In this step, which is the first one in scientific discussion, students are expected to structure their newly gained knowledge by associating it with their prior knowledge or with an event given via data (graph, table) or a story, and to verify or rebut their claims. In all discussion activities, students thought as scientists and structured their knowledge and conceptions within the scientific method process; moreover, the model that Toulmin built on the functional relations between the elements of an argument was applied.

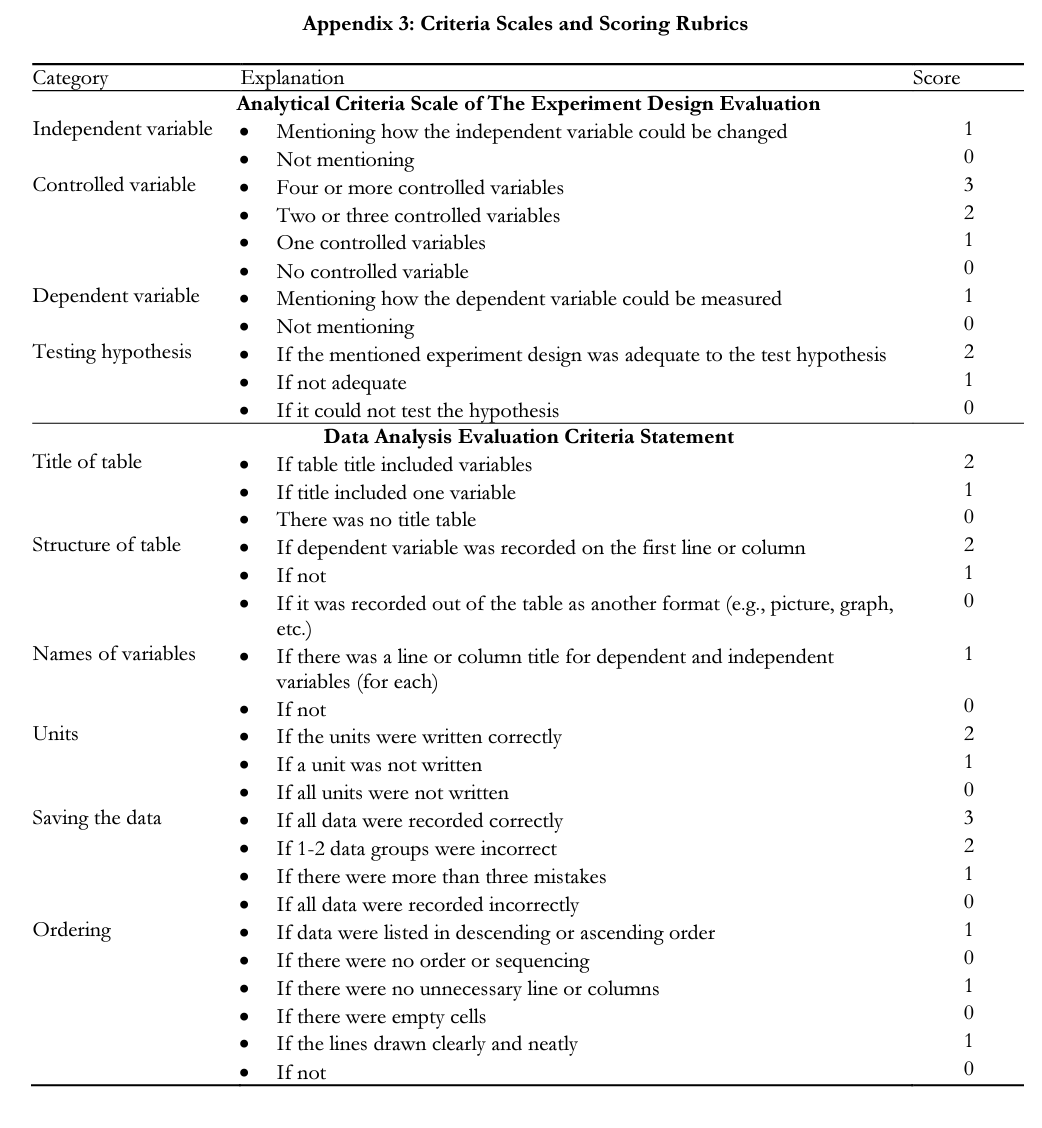

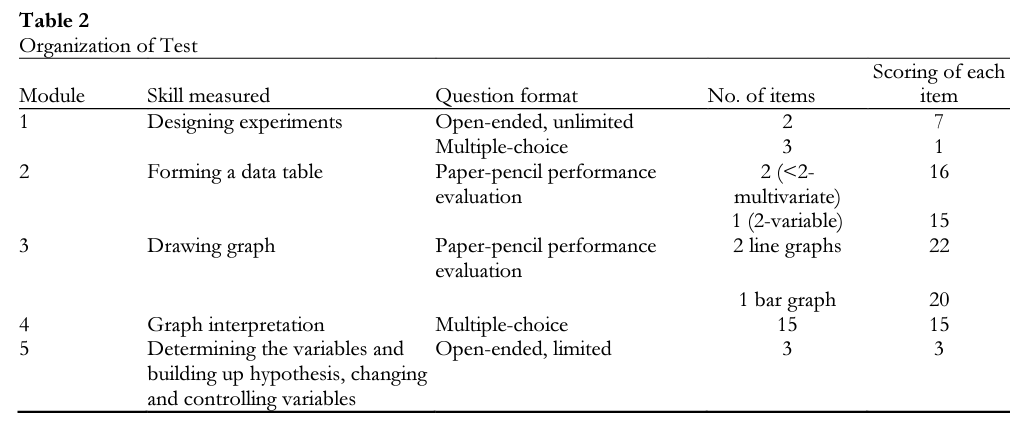

In order to determine if the students had the necessary prior knowledge and skills needed for laboratory studies, the Scientific Process Skills Scale (SPSS), which aims to measure scientific process skills such as determining variables, hypothesizing, designing experiments, recording data, drawing graphs, and reading graphs, was administered. The SPSS was selected because the effect of the teaching method on each of the students’ scientific process skills was being examined separately and in detail, disregarding conceptual understanding. The SPSS in this study contained questions from both the researcher and Temiz (2007), who had built a multiformat test of 171 items in five modules. The SPSS has a multiformat structure composed of five modules of 29 items that measure the improvement of the targeted skills (Table 2). It contains open-ended, multiple-choice, and paper-pencil performance evaluation questions. The scale was applied to both the control and treatment group students as pre- and posttests. It was administered in a 90-minute period both prior to and following the instruction. The evaluation of five basic skills under the five modules in the scale is explained next (see Appendix 3 for the scoring rubrics).

Module 1 — Skills of designing experiments. In the first part of the scale, students were asked to design an experiment to test a given hypothesis and explain that designed experiment by writing or drawing; in the second part, they were asked to choose and mark the most appropriate experiment that tested the given hypothesis. The Analytical Criteria Scale of Experiment Design Evaluation (Temiz, 2007) was used to evaluate the experiments designed in the first part. Criteria in the scale were gathered in four categories: independent variable, dependent variable, controlled variables, and test of hypothesis. In the second part of the scale, each response was scored as either correct or incorrect, for a total possible score of 17 points in this module.

Module 2: Skills of forming a data table. Findings of several experiments done beforehand were provided to students in the form of texts or drawings. They were asked to draw a data table using the data given. To evaluate their data tables, the “Two-Variable Data Table Analytical Criteria Evaluation Scale” and the “More than Two Multivariate Data Analysis Evaluation Criteria Statement” (Temiz, 2007) were used for each question, respectively. Criteria in the scale were gathered in six categories: table title, table frame, variable names, units, data record, and arrangement. It was possible to receive a total of 47 points for this module.

Module 3: Skills of drawing graphs. Students were asked to draw graphs using the three tables provided. The first two data tables included continuous data groups; the third one included discontinuous data groups. A line diagram was expected for continuous data whereas a bar diagram was expected for discontinuous data. The first two questions in the module were prepared by the researcher. In order to evaluate their graphs, the “Line Graphs Control Evaluation List” or the “Bar Graphs Control Evaluation List” (Temiz, 2007) was used. In the scale, criteria were gathered in four categories: titles, axes, graphs, and neatness and order. It was possible to receive a total of 64 points in this module.

Module 4: Skills of graph interpretation. Students were asked to make estimations, find maximum and minimum points and data pairs, find increasing and decreasing trends, draw conclusions, find relations between variables, derive the mathematical relationship, compare variables, and associate diagrams with the hypothesis. There were a total of 15 multiple-choice questions. Responses were scored as either correct or incorrect, with a total possible score of 15 points in this module.

Module 5: Skills of determining the variables and building up hypothesis, changing and controlling variables. Students were asked to make hypotheses about a given problem situation and record dependent, independent, and controlled variables according to the hypothesis that they wrote. For this reason, higher-level abilities were needed for determining the variables and hypothesizing. To evaluate student answers, the “Analytical Criteria Scale of Determining Variables and Hypothesizing” (Temiz, 2007) was used. Criteria were gathered in three categories: determining all variables related to the experiment, writing a hypothesis, and defining variables. It was possible to receive a total of 27 points in this module. The responses of the control and treatment group (n = 34) students were evaluated by two coders independently using criteria scales and control lists. Examples of student answers in the scale are given below in the qualitative results section.

The independent variable for this research is the teaching approach (scientific argumentation-centered vs. traditional), and the dependent variables are the students’ integrated scientific process subskills.

Validity and Reliability Analyses

In order to ensure the content validity of the test, three chemistry teachers and three academics were consulted for their opinion on the following characteristics: appropriateness of questions in testing skills, number of questions and the duration for answering the questions, clarity of the language of the test, and any errors in the scenarios presented in the questions. The SPSS reliability analyses were conducted through test-retest reliability analysis for modules with multiple-choice questions and intercoder agreement analyses for modules with open-ended questions.

Test-retest reliability. The test-retest reliability of the total points of multiple-choice questions during two different administrations of the SPSS was evaluated via Pearson correlation analysis in two Grade 11 classes in the same school that were not part of the control and treatment groups. The Pearson correlation coefficient is appropriate for representing reliability, indicating the degree of common variance between measures for quantitative and continuous data (Rothstein & Echternach, 1993). The last three items of Module 1 (designing an experiment) and Module 4 (graph interpretation), in which the multiple-choice questions were included, were administered for the second time to 34 students 6 months after the first administration. Analyses of the two sets of results indicated Pearson’s coefficients as follows: r(33) = .62, p = .000 for Module 1 and r(33) = .60, p = .000 for Module 4. Test-retest reliability was moderate for Modules 1 and 4 (0.50 to 0.74); the test-retest correlations of the scale were considered a supportive finding about the reliability and consistency of the scores (Rodion et al., 2011).
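
The test-retest coefficient described above can be illustrated with a minimal Python sketch. The two score lists are hypothetical and are not the study’s data, and the helper names `pearson_r` and `is_moderate` are illustrative assumptions; only the 0.50 to 0.74 band for “moderate” reliability is taken from the text.

```python
import math


def pearson_r(first, second):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_a = sum(first) / len(first)
    mean_b = sum(second) / len(second)
    # Sum of cross-products and sums of squares around the means.
    cross = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    ss_a = sum((a - mean_a) ** 2 for a in first)
    ss_b = sum((b - mean_b) ** 2 for b in second)
    if ss_a == 0 or ss_b == 0:
        raise ValueError("correlation is undefined when a list has no variance")
    return cross / math.sqrt(ss_a * ss_b)


def is_moderate(r):
    """True when r falls in the 0.50 to 0.74 band the text calls moderate."""
    return 0.50 <= r <= 0.74


# Hypothetical first and second administration scores for six students.
first_administration = [10, 12, 9, 14, 11, 13]
second_administration = [11, 12, 10, 13, 10, 14]
r = pearson_r(first_administration, second_administration)
print(f"r = {r:.2f}, moderate band: {is_moderate(r)}")
```

Applied to the reported coefficients, `is_moderate(0.62)` and `is_moderate(0.60)` both return `True`, which matches the classification given for Modules 1 and 4.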

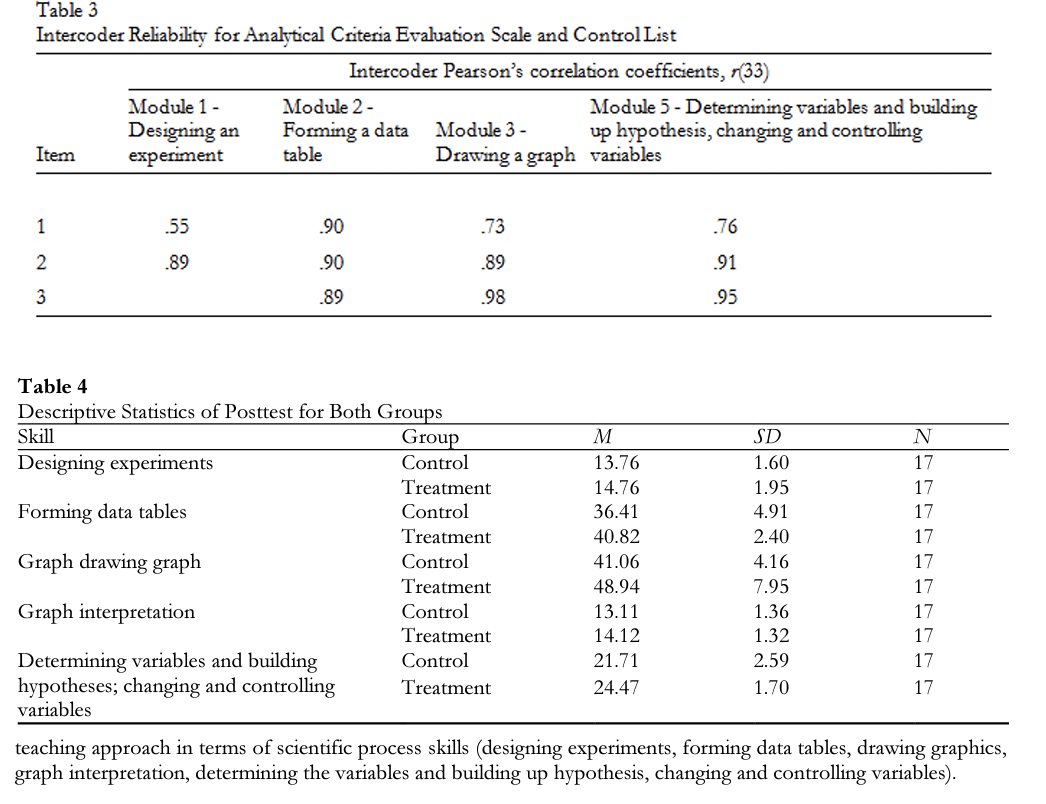

Intercoder reliability. The reliability of the control lists and criteria scales designed to evaluate the modules containing open-ended questions (Modules 1, 2, 3, and 5) was tested via intercoder reliability tests. If the rating scale is continuous (e.g., 0 to 10), Pearson’s product moment correlation coefficient is suitable (American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 1985). The tests showed the level of agreement between two independent coders. In the modules with open-ended questions, students were asked to perform a specific task on paper. To test intercoder reliability, a question-by-question analysis was conducted and Pearson’s coefficients were computed for each subcategory item. The primary researcher and the second coder, a chemistry teacher, coded every response. The results of the analyses showed that agreement between coders was high and that the scoring tools designed for Modules 1, 2, 3, and 5 were understood in the same way, with high correspondence rates, by different coders (Table 3). When the findings are evaluated in a general manner, it can be said that, for the sample with which the study was carried out, the reliability of the SPSS was at acceptable levels.

DATA ANALYSES

This study used SPSS 18.0 for statistical analyses. In order to investigate the research questions, repeated t-tests and multivariate analysis of covariance (MANCOVA) were used. The alpha was set at the typical .05 level. Before running the analyses, the homogeneity of variances assumption was checked through Levene’s test and there were no violations for any dependent variable. The homogeneity of covariance assumption was also checked and was met. In this study, students’ answers and drawings were also evaluated qualitatively.

RESULTS

Quantitative Analysis

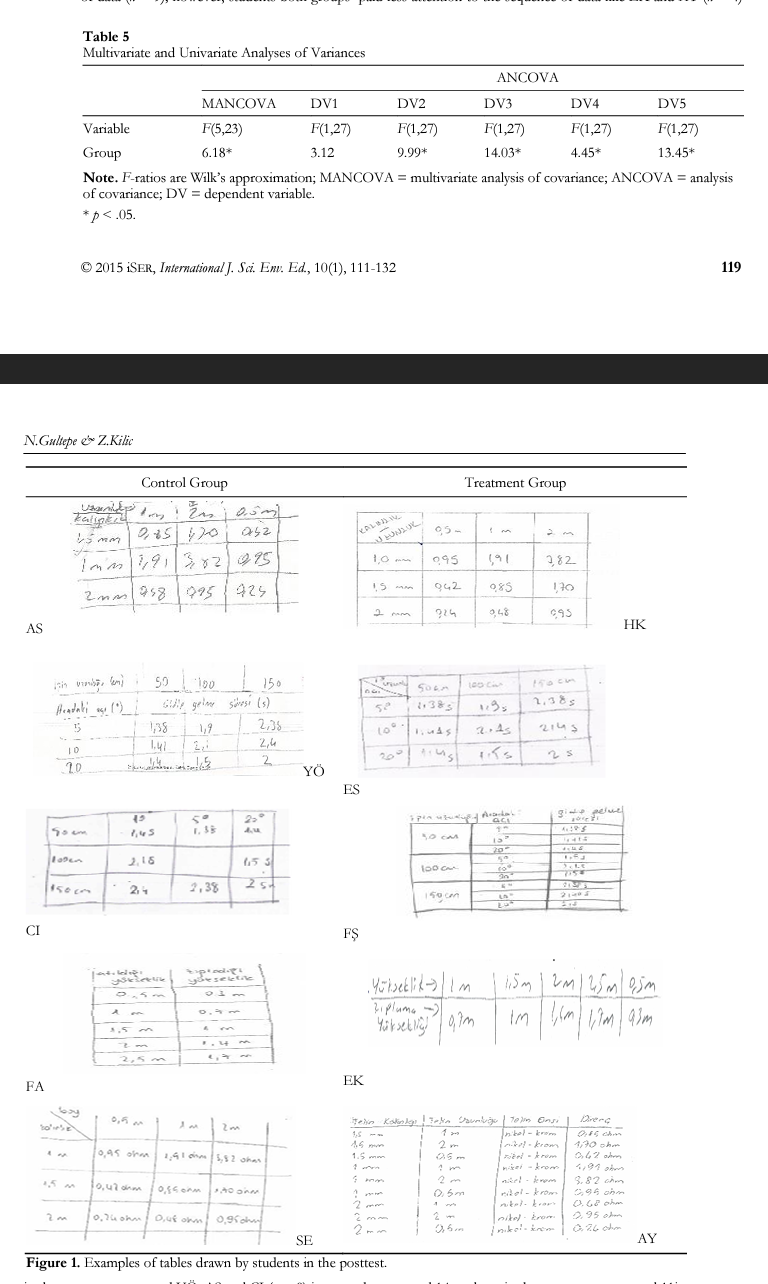

Means and standard deviations for each integrated subskill are shown in Table 4. The findings show that students in the scientific argumentation-based teaching approach had higher scores than students in the traditional teaching approach in terms of scientific process skills (designing experiments, forming data tables, drawing graphs, graph interpretation, determining the variables and building up hypothesis, changing and controlling variables).

Repeated t-test

A repeated t-test was used to determine the change in students’ scientific process skills over time. For the control group, the results revealed a statistically significant increase in these students’ skills in designing experiments, t(16) = -4.79, p < .001; drawing graphs, t(16) = -4.58, p < .001; and determining variables and building up hypothesis, changing and controlling variables, t(16) = -6.23, p < .001. However, there were no statistically significant differences in the students’ graph interpretation skills (p = .09) and forming data tables (p = .05) throughout the semester. For the treatment group, we found a statistically significant increase in students’ skills in designing experiments, t(16) = -8.50, p < .001; forming data tables, t(16) = -5.24, p < .001; drawing graphs, t(16) = -6.52, p < .001; graph interpretation, t(16) = -2.59, p = .002; and determining the variables and building up hypothesis, and changing and controlling variables, t(16) = -15.47, p < .001.
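
As a minimal sketch of how a repeated (paired-samples) t statistic of this kind is computed, the Python function below works on hypothetical pretest and posttest scores, not on the study’s data. The function name `paired_t` and the pretest-minus-posttest sign convention are assumptions; that convention is chosen only because it yields negative t values when scores increase, as in the values reported above.

```python
import math


def paired_t(pre, post):
    """Return (t, df) for a paired-samples t-test on pre/post scores.

    Differences are taken as pre minus post (an assumed convention), so
    gains from pretest to posttest give a negative t.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need paired lists with at least two students")
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = sum(diffs) / n
    # Sample variance of the differences (n - 1 in the denominator).
    var_diff = sum((d - mean_diff) ** 2 for d in diffs) / (n - 1)
    if var_diff == 0:
        raise ValueError("t is undefined when all differences are identical")
    standard_error = math.sqrt(var_diff / n)
    return mean_diff / standard_error, n - 1


# Hypothetical scores for four students (illustrative only).
pretest = [1, 2, 3, 4]
posttest = [2, 4, 5, 5]
t_value, df = paired_t(pretest, posttest)
print(f"t({df}) = {t_value:.2f}")
```

With 17 students per group the degrees of freedom are 17 - 1 = 16, which is why each statistic above is reported as t(16).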

Multivariate Analysis of Covariances (MANCOVA)

In order to determine if the teaching approach had any effect on students’ improvement in scientific process skills, MANCOVA was applied, controlling for the pretest scores. Since five continuous dependent variables were used in the study, one-way MANCOVA was used to determine whether there were significant differences between the treatment and control groups in terms of the following continuous dependent variables: skill in designing experiments (DV1), skill in forming data tables (DV2), skill in drawing graphs (DV3), skill in graph interpretation (DV4), and skill in determining the variables and building up hypothesis, changing and controlling variables (DV5). The independent variable was teaching approach. The multivariate analysis of covariance showed that there was a statistically significant difference between the treatment and control groups on the combination of five dependent variables, Wilks’ lambda = .43, F(5, 23) = 6.18, p = .001, ηp² = .57, indicating that the treatment explained 57% of the variance in the dependent variables. The findings suggest that a scientific argumentation-based teaching approach is more effective than a traditional teaching approach in acquiring integrated science process skills (see Table 5).
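
The link between the reported Wilks’ lambda, the F ratio, and the 57% figure can be sketched in Python. This assumes the standard two-group case, in which partial eta squared equals 1 minus lambda and the F ratio for Wilks’ lambda is exact; the function names are illustrative and the calculation is a consistency check on the reported values, not a reanalysis of the data.

```python
def partial_eta_from_wilks(wilks_lambda):
    """Multivariate partial eta squared when only two groups are compared."""
    return 1.0 - wilks_lambda


def f_from_wilks(wilks_lambda, df_effect, df_error):
    """F ratio implied by Wilks' lambda in the two-group case."""
    return ((1.0 - wilks_lambda) / wilks_lambda) * (df_error / df_effect)


def wilks_from_f(f_value, df_effect, df_error):
    """Invert the relation above to recover lambda from a reported F."""
    return 1.0 / (1.0 + f_value * df_effect / df_error)


eta = partial_eta_from_wilks(0.43)        # effect size from the reported lambda
f_approx = f_from_wilks(0.43, 5, 23)      # F implied by the rounded lambda
lam = wilks_from_f(6.18, 5, 23)           # lambda implied by the reported F
print(round(eta, 2), round(f_approx, 2), round(lam, 3))
```

With the rounded value of .43 the formula gives an F of about 6.10; the reported F(5, 23) = 6.18 corresponds to a lambda of about .427, which still rounds to .43, so the reported figures are mutually consistent.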

In order to examine the effect of treatment on each dependent variable, controlling for the pretest scores, ANCOVA was conducted. The results revealed that the treatment (argumentation) had a significant effect on the skill of forming data tables, F(1, 27) = 9.99, p = .004, partial η² = .27; graph drawing skills, F(1, 27) = 14.03, p = .001, partial η² = .34; graph interpretation skills, F(1, 27) = 4.45, p = .04, partial η² = .14; and the skill of determining the variables and building up hypotheses, changing and controlling variables, F(1, 27) = 13.45, p = .001, partial η² = .33. There was no statistically significant difference for the skill of designing experiments (see Table 5 for multivariate and univariate analyses of variance).
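The univariate effect sizes can be cross-checked from the reported F values and degrees of freedom. The following is a minimal sketch, assuming the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error); the function name and dictionary keys are illustrative and not taken from the article.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values reported in the text for the four significant univariate effects,
# each with degrees of freedom (1, 27). Keys are shorthand labels (assumed names).
reported_f = {
    "forming data tables": 9.99,
    "drawing graphs": 14.03,
    "graph interpretation": 4.45,
    "variables and hypotheses": 13.45,
}

# Round to two decimals, the precision used in the article.
effect_sizes = {
    skill: round(partial_eta_squared(f, 1, 27), 2)
    for skill, f in reported_f.items()
}

for skill, eta in effect_sizes.items():
    print(f"{skill}: partial eta squared = {eta:.2f}")
```

Under this identity the four values come out as .27, .34, .14, and .33, matching those reported. The same function applied to the multivariate test, F(5, 23) = 6.18, gives .57.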

QUALITATIVE ANALYSIS

Students’ scientific process skills can be improved in time through activities although some (e.g., designing experiment subprocess skills) require more time and effort. The analyses of experiments that students designed in the SPSS show that after instruction the designing experiment subskill improved more in the treatment group. A question intending to evaluate their experiment designing skills and examples of their responses are given below.

Question: Design an experiment to test this hypothesis: “The solubility of a solid in water changes according to temperature.”

Student GA (treatment group): First I chose the salt, which dissolves endothermically. I put 100 mL of water into a tube. I measured the temperature of the water. Then I added well-dissolving salt into this tube. I looked at the solubility of this salt at this temperature. I weighed it and added it to the water. Then I put it in an ice bath and observed the precipitation. Then I weighed the mass of precipitate.

Student FA (control group): Put the same amount of water into three identical containers under constant pressure. Heat the water in the first container to 25°C, the second one to 50°C, the third one to 80°C. Add the same amount of sugar into the containers. Then wait and keep on adding into the three containers the same amount of sugar to observe the dissolving process.

Both students chose the dependent variable (solubility of salt), independent variable (temperature), and controlled variables (volume of water, salt); however, FA incorrectly chose pressure as a controlled variable. FA also suggested adding the same amount of salt to water at different temperatures. If this were done, the mass of salt dissolved could not be measured.

The analyses of student responses showed that students in both groups mentioned how the independent variable could be changed. Controlled variables were mentioned by students in both groups; however, control group students used controlled variables more accurately. For example, in the question that examines the effect of temperature on the solubility of salt, 4 students in the control group determined the type of container and pressure as the constant variable; 4 students in the control group and 9 students in the treatment group stated how the dependent variable could be measured (determined).

In Module 3, students were asked to build a data table. When the data in the tables were examined, it was observed that no student in either group named the table in the pretests or posttests. Examples of students’ tables drawn in the posttest are shown in Figure 1. It was also noted that some students in the treatment group, such as HK, ES, and FŞ (n = 13), and in the control group, such as FA and SE (n = 9), drew the tables in the posttest in a more orderly way and paid attention to the sequence of data; however, other students in both groups, such as EK and AY (n = 4) in the treatment group and YO, AS, and CI (n = 8) in the control group, paid less attention to the sequence of data. Fourteen students in the treatment group and 11 in the control group completed the units.

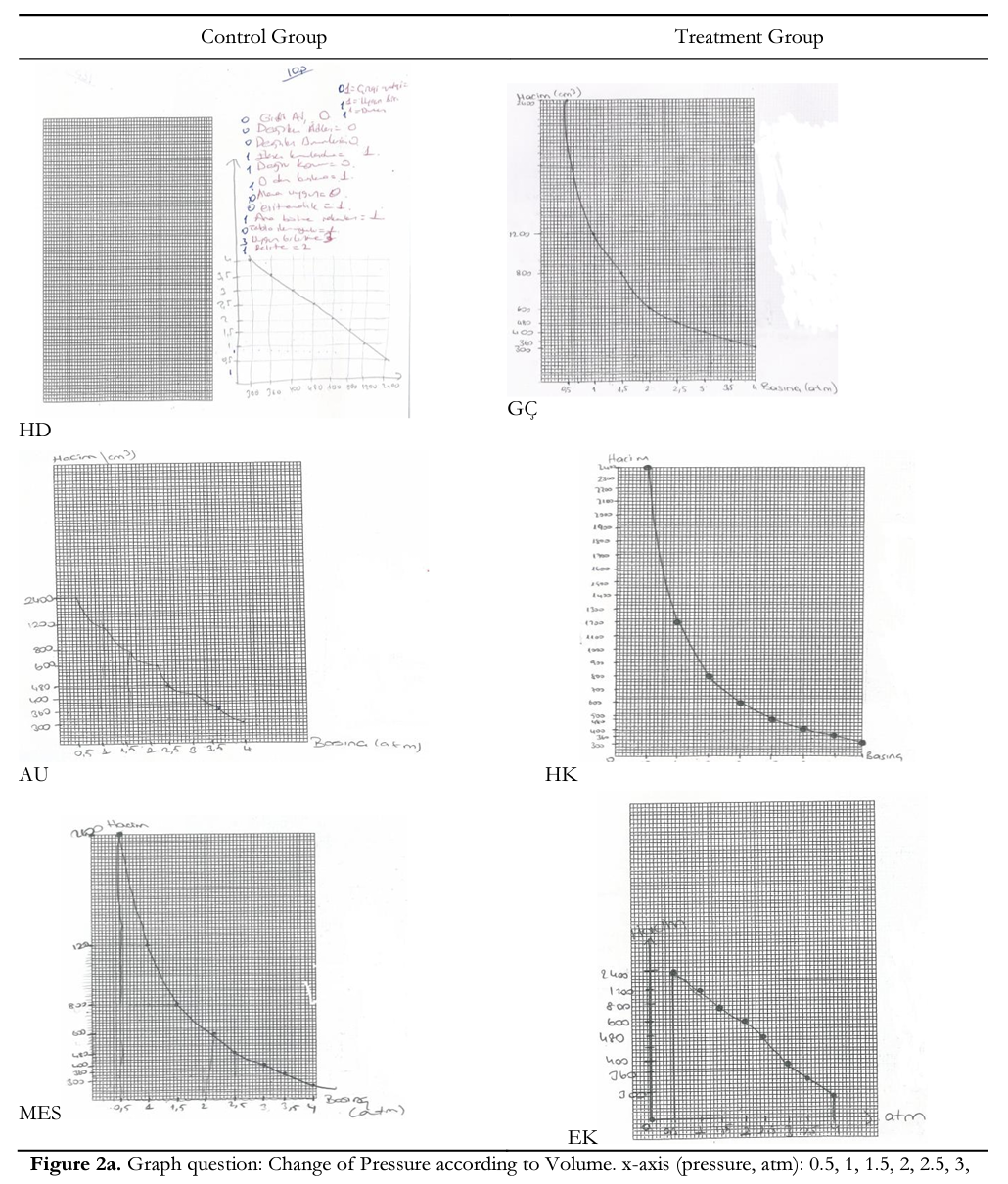

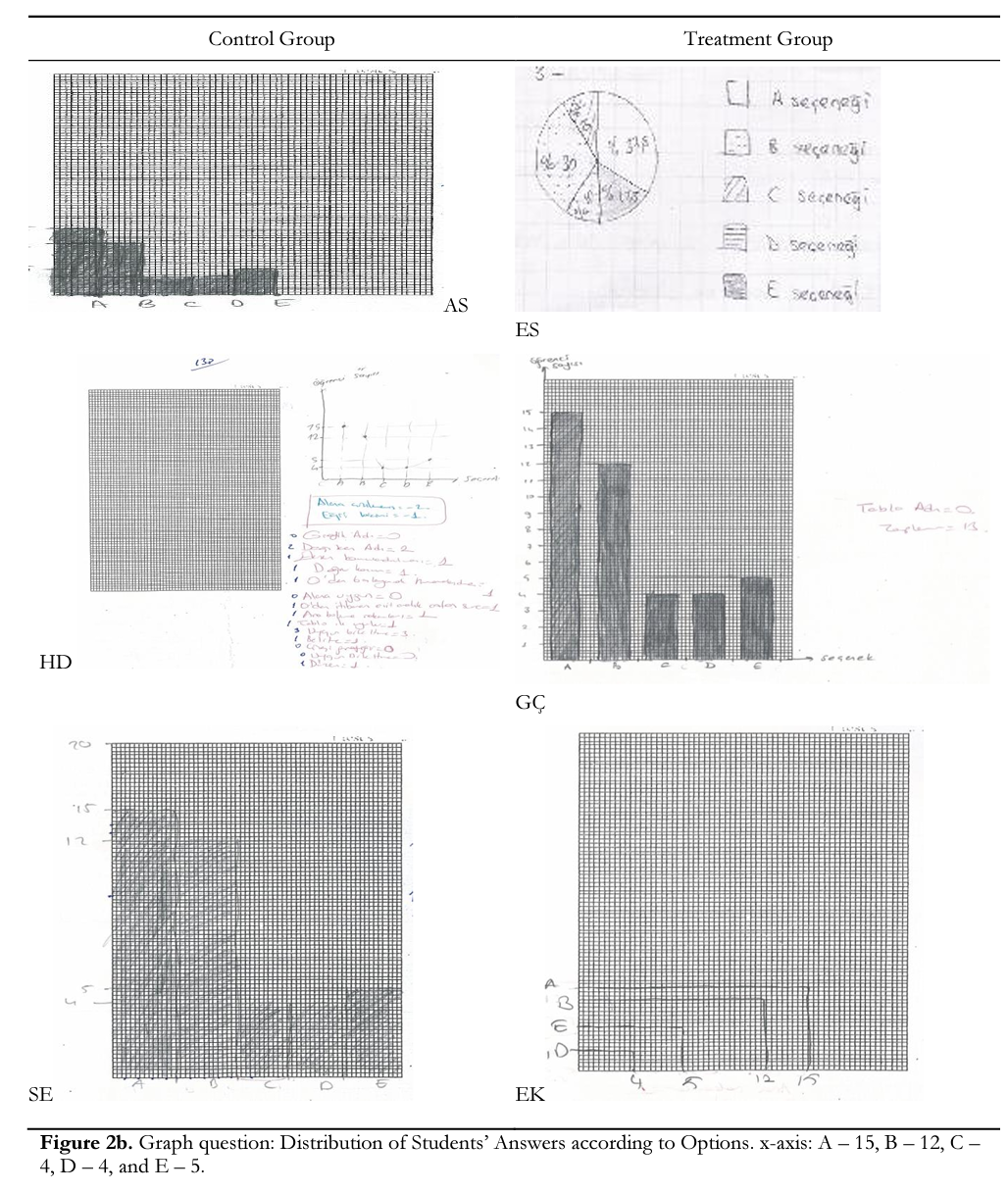

In Module 4, students were asked to draw a graph. Sample questions and students’ drawings for these questions are given in Figure 2. When the graphs were examined, several important results emerged. First, no student in either group named the table in either the pretest or the posttest. Second, 11 students in the treatment group and 7 students in the control group wrote the names of the variables and the units next to the x-axis and y-axis. For instance, AS and HD (control group) and AY and EK (treatment group) did not write the names of the variables next to the axes (Figures 2a, 2b, & 2c), and FA and HD (control group) and HK and AY (treatment group) did not write units (Figures 2a, 2b, & 2c). In addition, 15 students in the treatment group and 12 students in the control group numbered the axes in ascending order at equal intervals. Third, 13 students in the treatment group and 9 in the control group chose an appropriate graph format for the data and placed all data pairs on the axes correctly. To illustrate, although HD (control group) and EK (treatment group) could not draw a bar graph correctly, ES (treatment group) drew a pie graph as a different form (Figure 2b). Fourth, three students in the control group and one student in the treatment group drew their graphs outside the specified space (Figures 2a, 2b, & 2c). Finally, treatment group students showed higher ability than control group students in drawing graphs suited to the data (linear or curved) and in dividing the axes into equal increasing or decreasing intervals that fit the available area of the graph paper. These students reflected details such as whether the lines in the graph were straight or curved and, for a curved graph, the direction of the curvature; examples are GQ, ZO, and HK in the treatment group and SE and MES in the control group (Figures 2a & 2c). This was an indicator that they had configured the concepts accurately and deeply. However, students such as AU, FA, and HD (control group) and EK (treatment group) did not notice this detail (Figures 2a & 2c).

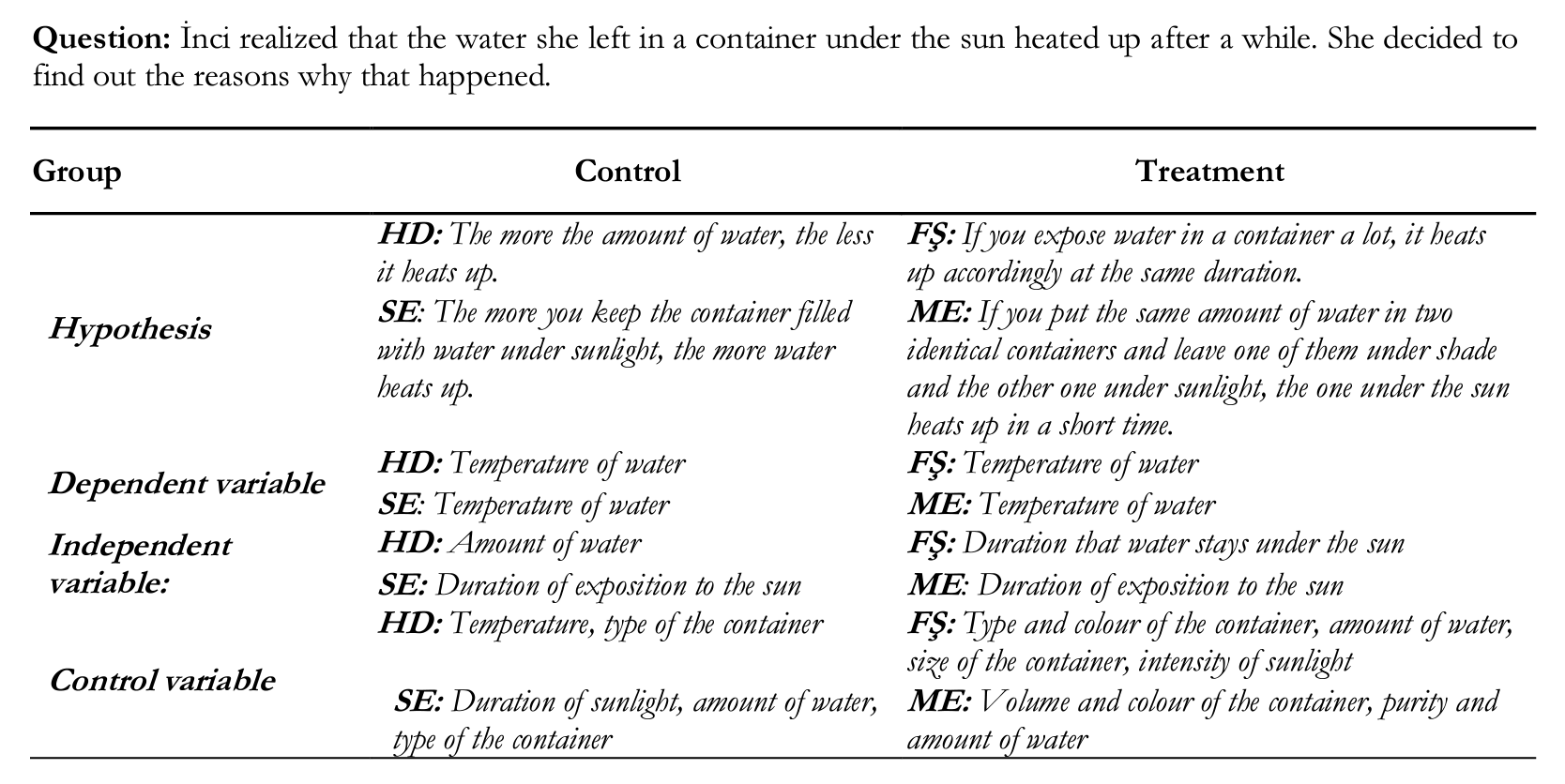

When the data in Module 5 were examined, it was seen that treatment group students had higher abilities compared to control group in determining control variables, measuring the change in the dependent variable depending on the independent variable, detecting the relationship between dependent and independent variables and designing a sufficient experiment to test a given hypothesis, and determining the variables of the situation of a given problem and the variables toward its solution. Some examples of hypotheses and variables that the students determined follow.

For example, the students in the treatment group identified a great number of control variables. In accordance with SE’s hypothesis, the amount of water should have been identified only as an independent variable, but she also wrote it in the control variable part. Also, ME in the treatment group wrote control variables (type of container and amount of water) in the hypothesis sentence.

According to these results, it can definitely be concluded that the students’ writing their claims on the activity papers with reasons in the argumentation activities contributed to their expressing the experiments and hypotheses they designed in both a written and oral format.

GENERAL DISCUSSION

Experience that individuals acquire through their lives is very important in terms of education. This experience causes one or two changes in topics such as getting information about facts, skill improvement, and conceptual understanding. In this study, both a traditional teaching approach and an argumentation-based teaching approach made contributions to the development of students’ integrated scientific process skills. However, in the argumentation activities based on causal hypotheses, students defending their claims with reasoning, supporting their claims with strong evidence-building patterns between concepts, listening to different claims and defences critically, and rebutting opposite views made greater contributions to the treatment group students’ skills of determining variables, interpreting data scientifically, and using a reason-result relation than to those of the control group. This result is in harmony with the literature finding that a scientific argumentation-based teaching approach results in better learning by students, thereby improving their scientific thinking (Driver et al., 2000; Erduran et al., 2006; Koray, Köksal, Özdemir, & Presley, 2007; Osborne et al., 2004a; Özdemir, 2005; Simon et al., 2006; von Aufschnaiter et al., 2004).

Ensuring the acquisition of scientific process skills requires an active process (Carr, 1999; Fusco & Barton, 2001; Keys, 1994; Seferoğlu & Akbıyık, 2006; Yerrick, 2000). Creating a discussion environment in the classroom allows students to ask questions, revise their knowledge in the light of evidence, judge the explanations of their peers, interpret and analyse data, and consider alternative explanations.

In laboratory activities, equipment and tools were not given directly to students. They were only given a problem situation and asked to hypothesize about the solution or were given a hypothesis and asked to design an experiment to verify it, record obtained data, and assess and interpret findings. In activities in which this approach was used, students took a more active part in assessing their existing scientific models, observations, interpreting data, and forming new scientific models.

Scientific process skills can be improved in time with the help of student activities. It should be noted that the experiment-designing process skill takes more time and energy to improve. In this study, in order to complete the program, there was not enough time for students to participate in experimental activities in practical applications. Hence, some experimental activities (i.e., selective precipitation, buffer solutions) that could be performed in a laboratory to determine the effects of this teaching approach on experiment design were conducted theoretically via discussion-centered experimental activity papers. For the selective precipitation activity, students were given a problem situation and asked to suggest a method for the solution. They were given a list (table) that contained only the solubility equilibrium constant of salts. In this activity, students studied individually first. The output of this activity was really high when students used rebuttals and back-ups. It could be seen that this activity contributed greatly to the improvement of students’ developing alternative solutions to the given problem, assessing other opinions, and acquiring scientific process and problem-solving skills.

It can be said that the teaching approach based on scientific argumentation through written and oral argumentation activities had an effect on the improvement of these skills. Schafersman (1991) stated that writing activity improves organizing thoughts and thinking critically. Hence, in order to improve scientific process skills, open-ended questions should be asked in examinations inside and outside the class (Colvill & Pattie, 2003; Harlen, 1999). Scientific argumentation activity improves students’ use of their existing knowledge, their interactions with the concepts learned, and their skill of inquiry (Lazarou, 2009). In such activities, students defended their claims, evaluated others’ ideas, and presented different evidence. Actually, they supported a different shared view with different evidence, which helped them handle the situation critically, use their knowledge patterns in their defence, get meaningful learning, and consequently use the relations between variables. Also, according to

IMPLICATIONS

In science classrooms, engaging students in argumentation is not a goal of the curriculum; therefore, teachers rarely encourage students to discuss. In their study of 12 elementary school teachers’ use of argumentation in their classrooms, Simon et al. (2006) pointed out that, before applying the argumentation method, the teachers felt doubtful about explaining alternative concepts to students, since students might strengthen concepts not in agreement with scientific truth or confuse themselves, and that they felt relieved after teaching with an argumentation method. In fact, this means that teachers do not know about argumentation, which is not surprising because very few teachers have been educated about argumentation. An increasing number of studies demonstrate the benefits of argumentation in science education; this is why argumentation classes should be offered at postsecondary institutions for future teachers. However, very few studies offer practical help to teachers and educators. Increasing the number of such studies would contribute to the educational literature. Studies on the acquisition and improvement of scientific skills have implied that school type affects study results. Highly successful students took part in this study. Control group students actively participated in classes in which the chemical equilibrium and acids and bases units were studied, rather than acting as passive recipients. In the traditional approach classes, some students asked high-level questions about problems they encountered in and out of the classroom, and other students gave answers or shared their observations. Similar studies can be carried out in different schools at different levels in order to obtain more objective results.

In the treatment group activities, some students were eager and inquisitive; however, because experimenting carried out in parallel with argumentation required more time than other argumentation activities, the experiments and their results were assigned as homework and the next lesson was conducted with students’ answers. These argumentations were not in the expected form. Kılıç et al. (2010) conducted a one-term study on the effect of guided research, to which pre- and postlaboratory argumentations were added, on the development of scientific process skills. In that study, as the laboratory duration was not adequate, no statistically significant difference was found between treatment and control group students’ scientific process skills. The researchers supported their findings with Tan and Temiz’s (2003) study. To examine the effect of the argumentation-based teaching approach on the acquisition and development of the experiment-designing subskill and to obtain more objective results, a longer term study should be conducted. A foundation for scientific ways of thinking should be built for students through long-term studies on basic scientific process skills in the first level of elementary education (Bozdoğan, Taşdemir, & Demirbaş, 2006).

ACKNOWLEDGMENTS

The authors wish to thank Sharyl A. Yore for her technical advice and editing the manuscripts.

REFERENCES

- Atasoy, B., Akkuş, H., & Kadayıfçı, H. (2009). The effect of a conceptual change approach on understanding of students’ chemical equilibrium concepts. Research in Science and Technological Education, 27(3), 267-282.

- American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1985). Standards for educational and psychological testing. Washington, DC: Authors.

- Arcidiacono, F., & Kohler, A. (2010). Elements of design for studying argumentation: The case of two on-going research lines. Cultural-Historical Psychology, 1, 65-77.

- Aydeniz, M., Pabuccu, A., Çetin, P. S., & Kaya, E. (2012). Argumentation and students’ conceptual understanding of properties and behaviors of gases. International Journal of Science and Mathematics Education, 10, 1303-1324.

- Bozdoğan, A., Taşdemir, A., & Demirbaş, M. (2006). Fen bilgisi öğretiminde işbirlikli öğrenme yönteminin öğrencilerin bilimsel süreç becerilerini geliştirmeye yönelik etkisi [The impact of cooperative learning to improve students’ scientific process skills in the teaching of science]. Eğitim Fakültesi Dergisi, 7(11), 23-26.

- Bricker, L. A., & Bell, P. (2008). Conceptualizations of argumentation from science studies and the learning sciences and their implications for the practices of science education. Science Education, 92(3), 473-498.

- Carr, M. (1999). Being a learner: Five learning dispositions for early childhood. Early Childhood Practice, 1(1), 81-99.

- Chang, C., & Weng, Y. (2000). Exploring interrelationship between problem-solving ability and science process skills of tenth-grade earth science students in Taiwan. Chinese Journal of Science Education, 8(1), 35-56.

- Colvill, M., & Pattie, I. (2003). Science skills-the building blocks for scientific literacy. Investigating, 19(1), 21-23.

- Coştu, B., & Ünal, S. (2004). The use of worksheets in teaching Le Chatelier’s principle. Yüzüncü Yıl Üniversitesi Eğitim Fakültesi Dergisi, 1(1).

- Cross, D., Taasoobshirazi, G., Hendrick, S., & Hickey, D. (2008). Argumentation: A strategy for improving achievement and revealing scientific identities. International Journal of Science Education, 30(6), 837-861.

- Çelik, A. Y., & Kılıç, Z. (2014). The impact of argumentation on high school chemistry students’ conceptual understanding, attitude towards chemistry and argumentativeness. Eurasian Journal of Physics and Chemistry Education, 6(1).

- Çakmakçı, G., & Leach, J. (2005, August-September). Turkish secondary and undergraduate students’ understanding of the effect of temperature on reaction rates. Paper presented at the European Science Education Research Association (ESERA) Conference, Barcelona, Spain.

- Demircioğlu, G., & Yadigaroğlu, M. (2011). The effect of laboratory method on high school students’ understanding of the reaction rate. Western Anatolia Journal of Educational Science. Dokuz Eylül University Institute, Izmir, Turkey. ISSN 1308-8971.

- Driver, R., Newton, P., & Osborne, J. (2000). Establishing the norms of scientific argumentation in classroom. Science Education, 84(3), 287-312.

- Ebenezer, J. V., & Haggerty, S. M. (1999). Becoming a secondary school science teacher. Columbus, OH: Merrill.

- Erduran, S., Ardaç, D., & Yakmacı-Güzel, B. (2006). Learning to teach argumentation: Case study of pre-service secondary science teachers. Eurasia Journal of Mathematics, Science and Technology Education, 2(2), 1-14.

- Erduran, S., Simon, S., & Osborne, J. (2004). TAPping into argumentation: Developments in the application of Toulmin’s Argument Pattern for studying science discourse. Science Education, 88, 915-933.

- Ergül, R., Şimşekli, Y., Çalış, S., Özdilek, Z., Göçmençelebi, Ş., & Şanlı, M. (2011). The effects of inquiry-based science teaching on elementary school students’ science process skills and science attitudes. Bulgarian Journal of Science and Education Policy (BJSEP), 5(1), 71-81.

- Flores, G. S. (2000). Teaching and assessing science process skills in physics: The “Bubbles” task. Science Activities, 37(1), 31-37.

- Fusco, D., & Calabrese Barton, A. (2001). Re-presenting student achievement. Journal of Research in Science Teaching, 38, 337-354.

- Gültepe, N. (2010). Bilimsel tartışma odaklı öğretimin lise öğrencilerinin bilimsel süreç ve eleştirel düşünme becerilerinin geliştirilmesine etkisi [The impact of argumentation based instruction on improvement of high school students’ scientific process skill and critical thinking ability] (Unpublished doctoral dissertation). Gazi University, Ankara, Turkey.

- Gültepe, N., & Kılıç, Z. (2013). Argumentation and conceptual understanding of high school students on solubility equilibrium and acids and bases. Journal of Turkish Science Education, 10(4).

- Harlen, W. (1999). Purposes and procedures for assessing science process skills. Assessment in Education, 6(1), 129-144.

- Karamustafaoğlu, S. (2011). Improving the science process skills ability of science student teachers using I diagrams. Eurasian Journal of Physics and Chemistry Education, 3(1), 26-38.

- Kaya, E. (2013). Argumentation practices in classroom: Pre-service teachers’ conceptual understanding of chemical equilibrium. International Journal of Science Education, 35(7), 1139-1158.

- Kaya, E., Erduran, S., & Çetin, P. S. (2012). Discourse, argumentation, and science lessons: Match or mismatch between students’ perceptions and understanding? Mevlana International Journal of Education, 2(3), 1-32.

- Keys, C. W. (1994). The development of scientific reasoning skills in conjunction with collaborative writing assignments: An interpretive study of six ninth-grade students. Journal of Research in Science Teaching, 31, 1003-1022.

- Kılıç, B. G., Yıldırım, E., & Metin, D. (2010). Ön ve son laboratuar tartışması eklenmiş yönlendirilmiş araştırmaların bilimsel süreç becerilerinin geliştirilmesine etkisi [Effect of guided-inquiry with pre- and post-laboratory discussion on development of science process skills]. 9. Ulusal Sınıf Öğretmenliği Eğitimi Sempozyumu, Elazığ, 310-313.

- Kocakülah, A., & Savaş, E. (2013). Effect of the science process skills laboratory approach supported with peer-instruction on some of science process skills of pre-service teachers. Necatibey Eğitim Fakültesi Elektronik Fen ve Matematik Eğitimi Dergisi, 7(2), 46-77.

- Koray, Ö., Köksal, M. S., Özdemir, M., & Presley, A. I. (2007). The effect of creative and critical thinking based laboratory applications on academic achievement and science process skills. Elementary Education Online, 6(3), 377-389.

- Laugksch, R. C., & Spargo, P. E. (1996). Development of a pool of scientific literacy test-items based on selected AAAS literacy goals. Science Education, 80(2), 121-143.

- Lazarou, D. (2009, August-September). Learning to TAP: An effort to scaffold students’ argumentation in science. Paper presented at the biennial conference of the European Science Education Research Association, Istanbul, Turkey.

- Lee, O., & Fradd, S. H. (1996). Literacy skills in science performance among culturally and linguistically diverse students. Science Education, 80(6), 651-671.

- Lemke, J. L. (1990). Talking science: Language, learning and values. Norwood, NJ: Ablex.

- MEB (2008). Ortaöğretim 10. Sınıf Kimya Dersi Öğretim Programı [Secondary education 10th grade chemistry curriculum]. Talim ve Terbiye Kurulu Başkanlığı. Retrieved from http://ttkb.meb.gov.tr/program2.aspx

- Nussbaum, E. M., & Sinatra, G. M. (2003). Argument and conceptual engagement. Contemporary Educational Psychology, 28, 384-395.

- Osborne, J. (2002). Science without literacy: A ship without a sail? Cambridge Journal of Education, 32(2), 203-215.

- Osborne, J., Erduran, S., & Simon, S. (2004a). Enhancing the quality of argumentation in school science. Journal of Research in Science Teaching, 41(10), 994-1020.

- Osborne, J., Erduran, S., & Simon, S. (2004b). Ideas, evidence and argument in science (In-service training pack). London, UK: Nuffield Foundation.

- Ostlund, K. (1998). What research says about science process skills: How can teaching science process skills improve student performance in reading, language arts, and mathematics? Electronic Journal of Science Education, 4

- Önal, İ. (2005). İlköğretim fen bilgisi öğretiminde performans dayanaklı durum belirleme uygulaması üzerine bir çalışma [A study on the application of performance based due diligence in the teaching of elementary school science] (Unpublished master’s thesis). Hacettepe University, Ankara, Turkey.

- Önal, İ. (2008). Effects of constructivist instruction on the achievement, attitude, science process skills and retention in science teaching methods II course (Unpublished doctoral dissertation). Middle East Technical University, Ankara, Turkey.

- Özdemir, S. M. (2005). Üniversite öğrencilerinin eleştirel düşünme becerilerinin çeşitli etkenler açısından değerlendirilmesi [The evaluation of college students’ critical thinking in terms of various factors]. Türk Eğitim Bilimleri Dergisi, 3(3), 297-316.

- Özmen, H., Demircioğlu, G., Burhan, Y., Naseriazar, A., & Demircioğlu, H. (2012). Asia-Pacific Forum on Science Learning and Teaching, 13(1).

- Padilla, M. J. (1990). The science process skills. Research Matters to the Science Teacher, March 1 (No. 9004). Retrieved from http://www.narst.org/publications/research/skill.cfm

- Pekmez, E. (2010). Using analogies to prevent misconceptions about chemical equilibrium. Asia-Pacific Forum on Science Learning and Teaching, 11(2).

- Rezba, R. J., Sprague, C., Fiel, R. L., Funk, H. J., Okey, J. R., & Jaus, H. H. (1995). Learning and assessing science process skills. Dubuque, IA: Kendall/Hunt.

- Rothon, C., Stansfeld, S., Mathews, C., Kleinhans, A., Clark, C., Lund, C., & Flisher, A. (2011). Reliability of self report questionnaires for epidemiological investigations of adolescent mental health in Cape Town, South Africa. Journal of Child and Adolescent Mental Health, 23(2), 119-128.

- Rothstein, J., & Echternach, J. (1993). Primer on measurement: An introductory guide to measurement issues. Alexandria, VA: American Physical Therapy Association.

- Sampson, V., & Clark, D. B. (2011). A comparison of the collaborative scientific argumentation practices of two high and two low performing groups. Research in Science Education, 41(1), 63-97.

- Sampson, V., & Gleim, L. (2009). Argument-driven inquiry to promote the understanding of important concepts and practices in biology. The American Biology Teacher, 71(8), 165-172.

- Schafersman, S. D. (1991). An introduction to critical thinking. Retrieved from http://smartcollegeplanning.org/wp-content/uploads/2010/03/Critical-Thinking.pdf

- Scherz, Z., Bialer, L., & Eylon, B. S. (2008). Learning about teachers’ accomplishment in ‘learning skills for science’ practice: The use of portfolios in an evidence-based continuous professional development programme. International Journal of Science Education, 30(5), 643-667.

- Seferoğlu, S. S., & Akbıyık, C. (2006). Eleştirel düşünme ve öğretimi [Critical thinking and learning]. H.Ü. Eğitim Fakültesi Dergisi, 30, 193-200.

- Serin, G. (2009). The effect of problem based learning instruction on 7th grade students’ science achievement, attitude toward science and scientific process skills (Unpublished doctoral dissertation). Middle East Technical University, Ankara, Turkey.

- Simon, S., Erduran, S., & Osborne, J. (2006). Learning to teach argumentation: Research and development in the science classroom. International Journal of Science Education, 28(2-3), 235-260.

- Skoumios, M. (2009). The effect of sociocognitive conflict on students’ dialogic argumentation about floating and sinking. International Journal of Environmental Science Education, 7(4), 381-399.

- Tan, M., & Temiz, B. K. (2003). Fen öğretiminde bilimsel süreç becerilerinin yeri ve önemi [The location and the importance of scientific process skills in teaching science]. Pamukkale Üniversitesi Eğitim Fakültesi Dergisi, 1, 89-101.

- Temiz, B. K. (2007). Fizik öğretiminde öğrencilerin bilimsel süreç becerilerinin ölçülmesi [The assessment of students’ scientific process skills in teaching physics] (Unpublished doctoral dissertation). Gazi University, Ankara, Turkey.

- Toulmin, S. E. (1958). The uses of argument. Cambridge, England: Cambridge University Press.

- Turpin, T., & Cage, B. N. (2004). The effects of an integrated activity-based science curriculum on student achievement, science process skills and science attitudes. Electronic Journal of Literacy Through Science, 3, 1-15.

- Van Driel, J. H., Beijaard, D., & Verloop, N. (2001). Professional development and reform in science education: The role of teachers’ practical knowledge. Journal of Research in Science Teaching, 38(2), 137-158.

- von Aufschnaiter, C., Erduran, S., & Osborne, J. (2004, April). Argumentation and cognitive processes in science education. Paper presented at the annual conference of the National Association for Research in Science Teaching, Vancouver, Canada. Available from http://www.narst.org/annualconference/2009_fmal_program.pdf.

- von Aufschnaiter, C., Erduran, S., Osborne, J., & Simon, S. (2008). Arguing to learn and learning to argue: Case studies of how students’ argumentation relates to their scientific knowledge. Journal of Research in Science Teaching, 45(1), 101-131.

- Yerrick, R. K. (2000). Lower track science students’ argumentation and open inquiry instruction. Journal of Research in Science Teaching, 37(8), 807-838.

- Zachos, P., Hick, T. L., Doane, W. E. J., & Sargent, C. (2000). Setting theoretical and empirical foundations for assessing scientific inquiry and discovery in educational programs. Journal of Research in Science Teaching, 37(9), 938-962.